CS8001 - ODA Notes

Problem Sets - what to expect

- The questions will be quite similar to the reality checks, made up primarily of multiple-choice questions and code snippets.

- There are a good number of questions, ranging from one to two dozen.

- Each question shows whether your answer is correct or incorrect.


M3 - Stacks/Queue ADTs

Priority Queues

We will very briefly discuss a concept tangentially related to Queues, called the Priority Queue. The topic is placed here to help broaden your awareness of the ideas that can emerge from a topic. Thinking about how data structures behave, and how that behavior can be changed to create different structures, is an important growth-mindset technique. We will not be discussing their typical implementation until Module 6.

Priority Queues: A Linear Model?

In the video, we briefly mentioned that Priority Queues are a linear ADT. This is partially true, since we can think of the data being placed linearly in sorted order based on priority. For queues, this was precisely the case, except that the priority was the order in which data was enqueued. However, in practice, a linear model doesn't work very well for implementing Priority Queues. Let's consider the idea of implementing a Priority Queue by maintaining a sorted list. We have two options to consider: an array-backed sorted list or a linked sorted list.

For the array-backed version, it would be similar to an ArrayQueue, where the element with the highest priority could be at the front, while the element with the lowest priority could be at the back. With this model, dequeuing and peeking would be 𝑂(1) operations like in the ArrayQueue. However, in general, enqueuing would be an 𝑂(𝑛) procedure, even if we amortize the resize cost. This is because to enqueue, we'd need to maintain the sorted order of the data, so we'd need to shift data around to place it. There are other variations we could use, such as flipping the priority order and keeping the data zero-aligned, but they all lead us to similar conclusions.
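A minimal sketch of the "flipped" array variant mentioned above, assuming int data where a smaller value means higher priority (the class name SortedArrayPQ is hypothetical). Keeping the array sorted in descending order and zero-aligned puts the highest-priority item at the back, so dequeue and peek are O(1), while enqueue must shift elements to keep the order, which is O(n):

```java
import java.util.Arrays;
import java.util.NoSuchElementException;

// Hypothetical sketch: a priority queue backed by a sorted array.
// Assumption: smaller ints have higher priority. The data is kept in
// descending order, so the highest-priority item is always at size - 1.
class SortedArrayPQ {
    private int[] data = new int[4];
    private int size = 0;

    // O(n): shift every smaller element one slot right to make room for x.
    void enqueue(int x) {
        if (size == data.length) {
            data = Arrays.copyOf(data, data.length * 2); // resize
        }
        int i = size;
        while (i > 0 && data[i - 1] < x) {
            data[i] = data[i - 1];
            i--;
        }
        data[i] = x;
        size++;
    }

    // O(1): the highest-priority item is at the back, so nothing shifts.
    int dequeue() {
        if (size == 0) throw new NoSuchElementException("queue is empty");
        return data[--size];
    }

    // O(1): look at the back without removing it.
    int peek() {
        if (size == 0) throw new NoSuchElementException("queue is empty");
        return data[size - 1];
    }

    int size() {
        return size;
    }
}
```

Enqueuing 5, 1, 9, 3, 7 and then dequeuing three times returns 1, 3, and 5 in that order.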

For the linked version, it would again be similar to a LinkedQueue where the element with highest priority would be at the head while the element with lowest priority would be at the tail. Once again, peeking and dequeuing operations are 𝑂(1), but enqueuing would be an 𝑂(𝑛) operation since we need to find the location to insert the data.
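The linked version can be sketched the same way (int data, smaller value = higher priority, hypothetical class name SortedLinkedPQ). The head always holds the highest-priority item, so dequeue and peek are O(1), but enqueue walks the list to find the insertion point:

```java
import java.util.NoSuchElementException;

// Hypothetical sketch: singly linked priority queue kept in sorted order.
// Smaller values = higher priority, so the head always holds the minimum.
class SortedLinkedPQ {
    private static class Node {
        int data;
        Node next;
        Node(int data, Node next) {
            this.data = data;
            this.next = next;
        }
    }

    private Node head;
    private int size;

    // O(n): walk until the next node has lower priority than x.
    void enqueue(int x) {
        if (head == null || x < head.data) {
            head = new Node(x, head); // new highest priority goes at the head
        } else {
            Node curr = head;
            while (curr.next != null && curr.next.data <= x) {
                curr = curr.next;
            }
            curr.next = new Node(x, curr.next);
        }
        size++;
    }

    // O(1): the highest-priority item is always at the head.
    int dequeue() {
        if (head == null) throw new NoSuchElementException("queue is empty");
        int result = head.data;
        head = head.next;
        size--;
        return result;
    }

    int peek() {
        if (head == null) throw new NoSuchElementException("queue is empty");
        return head.data;
    }

    int size() {
        return size;
    }
}
```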

So, with our current range of ideas, it appears that implementing a Priority Queue efficiently is out of reach. We will begin developing the ideas that we do need starting with Module 6, in the near future!

Deques (double-ended queues, pronounced “deck”)

We end the section by discussing a natural extension of both Stacks and Queues, the Deque ADT. Rather than specializing in a specific add and remove behavior like Stacks and Queues, Deques support add and remove operations from either end of the data. The techniques involved in implementing the deque have already been discussed, so we will simply upcycle the ideas to show how it can be done.

Example use case: an online purchasing system.
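The deque operations can be sketched with the same circular-array idea as the ArrayQueue. This is a minimal sketch under assumptions: it stores ints, starts with capacity 4, and doubles on resize; the class name ArrayDequeSketch is hypothetical (Java's standard-library version is java.util.ArrayDeque). Adding data.length before taking % keeps the front index non-negative when it steps backwards from 0.

```java
import java.util.NoSuchElementException;

// Hypothetical sketch of a circular-array deque of ints.
class ArrayDequeSketch {
    private int[] data = new int[4];
    private int front = 0; // index of the first element
    private int size = 0;

    void addFirst(int x) {
        if (size == data.length) resize();
        // Step front back one slot, wrapping from 0 to data.length - 1.
        front = (front - 1 + data.length) % data.length;
        data[front] = x;
        size++;
    }

    void addLast(int x) {
        if (size == data.length) resize();
        data[(front + size) % data.length] = x;
        size++;
    }

    int removeFirst() {
        if (size == 0) throw new NoSuchElementException("deque is empty");
        int x = data[front];
        front = (front + 1) % data.length;
        size--;
        return x;
    }

    int removeLast() {
        if (size == 0) throw new NoSuchElementException("deque is empty");
        int back = (front + size - 1) % data.length;
        size--;
        return data[back];
    }

    int size() {
        return size;
    }

    // Copy elements in logical order into a larger array, re-zero-aligning front.
    private void resize() {
        int[] bigger = new int[data.length * 2];
        for (int i = 0; i < size; i++) {
            bigger[i] = data[(front + i) % data.length];
        }
        data = bigger;
        front = 0;
    }
}
```

All four operations are amortized O(1); only resize touches every element.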

Java's % Operator
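Java's % is a remainder operator: the result takes the sign of the left operand, so -1 % 7 evaluates to -1 rather than 6. This matters for wraparound index math (for example, stepping a circular array's front index backwards) and for compressing hash codes, which can be negative. A minimal sketch; the class and method names are hypothetical, while Math.floorMod is a standard method since Java 8.

```java
// Small demonstration of Java's remainder operator vs. Math.floorMod.
class ModDemo {
    // Naive wrap: returns a negative result whenever x is negative.
    static int naiveWrap(int x, int length) {
        return x % length;
    }

    // Safe wrap: Math.floorMod's result has the sign of the divisor,
    // so it always lands in [0, length) for a positive length.
    static int safeWrap(int x, int length) {
        return Math.floorMod(x, length);
    }
}
```

For example, naiveWrap(-1, 7) returns -1, while safeWrap(-1, 7) returns 6.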

CSVIS

M4 - BST Introduction

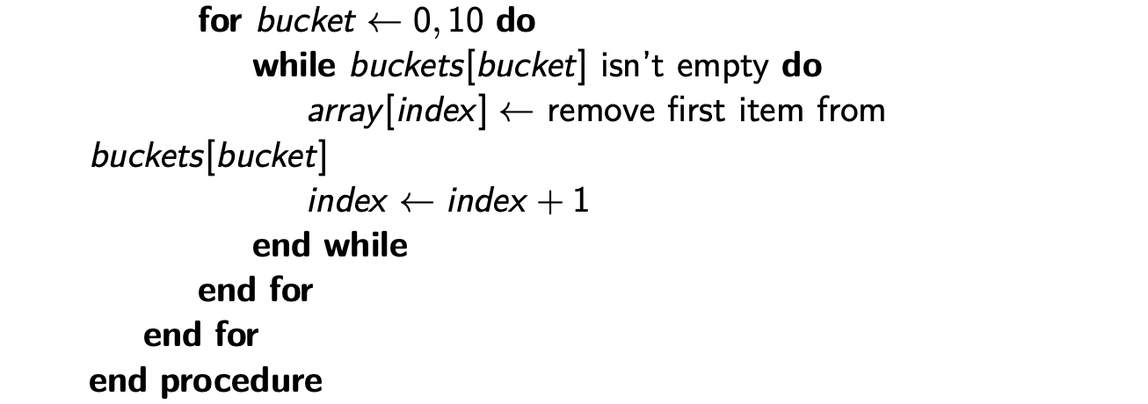

Introduction to Tree ADT

- Stacks, queues, and deques are limited by their ADT operations (they are linear)

- Trees are linked structures like linked lists, but each node can have multiple children

- Trees are typically not bidirectional (nodes usually don't store a reference back to their parent)

- Nodes with children are internal nodes; nodes with no children are leaf nodes (or external nodes)

- No concept of front and back since they aren’t linear

Was that an ADT?

In the first few modules, we made a large distinction between ADTs and data structures. To be clear, ADTs are high-level descriptions of a model, whereas a data structure is the low-level, detail-oriented implementation of a model. Up until now, the distinction was stark because there were multiple implementations for each ADT, and it served as a nice way of introducing the concepts.

However, moving forward, we'll be much looser with that distinction. We do this for multiple reasons; the primary reason is that, in the topics to come, the distinction would inhibit learning more than it would help. Some of the upcoming topics can be difficult to grasp. We would prefer for you to focus on these topic complexities, rather than worry about the semantics of whether a Tree is an ADT or a data structure (it can be either, depending on the language used). We will still distinguish between the ADTs in the diagrams shown in the module review sections, but they are not as important as they were in previous modules.

Binary Trees

- Each node has at least 3 components: data and references to its left and right children.

Binary Search Trees

- Every node in a node's left subtree must be smaller than that node, and every node in its right subtree must be greater.

- Big-O:

- Average: assuming the BST stays roughly balanced (dividing the data about in half at each level), insertion, search, and deletion are all O(log n).

- Worst: if the BST doesn’t divide the data in half (e.g., it degenerates into the shape of a linked list), insertion, search, and deletion are O(n).

CSVIS

Preorder Traversal

- There are three different depth-first binary tree traversals (preorder, postorder, and inorder) that all share a common strategy for accessing every piece of data.

- Each traversal is a variation of the same recursive strategy of traversing to the depths of each path.

- Traversals essentially accomplish what an Iterable/Iterator does in Java. All that differs between them is the order of the same actions.

- Preorder is a depth-first algorithm

- Useful when making an exact copy of a tree.

Postorder Traversal

- Also depth-first

- Useful when removing nodes from a tree, since children (leaves first) are visited before their parents.

- Recurses first before looking at the data, whereas preorder looks at the data first.

Inorder Traversal

- Depth-first

- Special for BSTs, since it returns the data in sorted order.
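The three depth-first traversals differ only in where the "look at the node" step sits relative to the two recursive calls. A minimal sketch, assuming int data and a hypothetical TreeNode class with data, left, and right fields (the three components of a binary tree node):

```java
import java.util.List;

// Hypothetical binary tree node: data plus references to left/right children.
class TreeNode {
    int data;
    TreeNode left, right;

    TreeNode(int data, TreeNode left, TreeNode right) {
        this.data = data;
        this.left = left;
        this.right = right;
    }

    TreeNode(int data) {
        this(data, null, null);
    }
}

class Traversals {
    // Preorder: look at the node, then recurse left, then recurse right.
    static void preorder(TreeNode node, List<Integer> out) {
        if (node == null) return;
        out.add(node.data);
        preorder(node.left, out);
        preorder(node.right, out);
    }

    // Inorder: recurse left, look at the node, then recurse right.
    // On a BST this produces the data in sorted order.
    static void inorder(TreeNode node, List<Integer> out) {
        if (node == null) return;
        inorder(node.left, out);
        out.add(node.data);
        inorder(node.right, out);
    }

    // Postorder: recurse left, recurse right, then look at the node.
    static void postorder(TreeNode node, List<Integer> out) {
        if (node == null) return;
        postorder(node.left, out);
        postorder(node.right, out);
        out.add(node.data);
    }
}
```

For a BST with root 50, left subtree 25 (children 10 and 30), and right subtree 75 (right child 90), inorder yields 10, 25, 30, 50, 75, 90.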

Levelorder Traversal

- Breadth-first traversal strategy

- There are other variations of this concept, but they are all similar to the levelorder traversal.

- Unlike the depth-first traversals, which use a Stack (the recursive call stack), the levelorder traversal uses a Queue (with a while loop) to access the data in order of closeness to the root, i.e., level by level.
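The levelorder traversal can be sketched with a Queue instead of recursion (int data, class names hypothetical; java.util.ArrayDeque serves as the Queue implementation):

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.List;
import java.util.Queue;

// Hypothetical sketch of a levelorder (breadth-first) traversal.
class LevelOrder {
    static class Node {
        int data;
        Node left, right;

        Node(int data, Node left, Node right) {
            this.data = data;
            this.left = left;
            this.right = right;
        }

        Node(int data) {
            this(data, null, null);
        }
    }

    static List<Integer> levelorder(Node root) {
        List<Integer> out = new ArrayList<>();
        if (root == null) return out;
        Queue<Node> queue = new ArrayDeque<>();
        queue.add(root);
        // Each dequeued node enqueues its children, so nodes come out
        // level by level, ordered by their distance from the root.
        while (!queue.isEmpty()) {
            Node curr = queue.remove();
            out.add(curr.data);
            if (curr.left != null) queue.add(curr.left);
            if (curr.right != null) queue.add(curr.right);
        }
        return out;
    }
}
```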

Choosing a Traversal

We have covered four different traversals for Binary Trees in this module. It is important to note that these traversal concepts can easily be extended to general trees. After all, three of the traversals are derived from simply changing up the order the "look at node" operation is performed with respect to the recursive calls. The procedures within each traversal are defined for Binary Trees. However, the properties of the traversals are exemplified when specifically examining the data returned from BSTs.

Let's take the time to highlight some of their uses and distinguishing properties. So, what makes each of these traversals unique?

- Preorder: The preorder traversal uniquely identifies a BST when adding the data to an empty tree in the order given by this traversal. Although we haven't discussed how the add method works in BSTs, you can double check this feature of the preorder traversal in the visualization tool. Most definitely, check this feature out after you've learned about the adding procedure. The preorder traversal is a hybrid depth approach that biases towards giving you data closer to the root faster than data in leaf nodes.

- Inorder: The most notable property of the inorder traversal is that if implemented on a BST, the data is returned in a sorted order. However, please note that the inorder traversal is the only one of the four traversals that does not uniquely define the BST. If the traversal is operating on a general Binary Tree, then it will give you the data on a left-to-right basis (if the tree is properly spaced out when drawn).

- Postorder: The postorder traversal is similar to preorder in that it uniquely identifies the BST. One useful feature of the postorder traversal is that if you were to remove data in this ordering, you would only remove leaf nodes. This particular property will come in handy when you learn the remove procedure of a BST. The postorder traversal is a hybrid depth approach that biases towards giving you data in leaf nodes faster than data closer to the root.

- Levelorder: This traversal is the black sheep in implementation, but it's also the easiest to understand conceptually. The levelorder traversal gives you the data in an ordering sorted based on the depth of the data. Thus, it is the fully realized version of the preorder traversal where it gives you full control of getting internal nodes before deeper leaves. As you might have guessed, it also uniquely defines a BST.

M5 - BST Operations

We are going to explore the power of the tree data structure, specifically Binary Search Trees (BSTs). We discuss the motivation for BSTs and how they relate to the binary search algorithm. We present efficient procedures for searching, adding, and removing in a BST. Similar to how binary search works, BSTs work by splitting the data into subtrees that each hold about half of the data of the level above, yielding O(log n) running times on average.

At the end of the module, we introduce an example of a probabilistic data structure known as a SkipList. Though the basis of SkipLists and their structure are different from BSTs, their search procedure works very similarly, which is why we've chosen to introduce them here.

Learning Objectives

- Extend their understanding of tree structures and their impact on efficient search operations.

- Learn efficient procedures for searching, adding, and removing from BSTs.

- Further their understanding of the pointer reinforcement restructuring recursion technique by applying it to the add and remove methods.

- Learn about a simple probabilistic data structure as a gateway to the world of randomization.

BST Searching

- Because of the BST order property, each comparison rules out one subtree; if the tree is balanced, this cuts the remaining data in half at each level.

- Efficiency: Avg: O(log n), Worst: O(n), Best: O(1)
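BST search can be sketched recursively (int data, hypothetical class names). Each comparison discards one subtree, which is where the O(log n) average and O(n) worst case come from; the O(1) best case is when the root holds the target:

```java
// Hypothetical minimal BST node and recursive search.
class BSTSearch {
    static class Node {
        int data;
        Node left, right;

        Node(int data, Node left, Node right) {
            this.data = data;
            this.left = left;
            this.right = right;
        }

        Node(int data) {
            this(data, null, null);
        }
    }

    // Returns true if target is in the subtree rooted at node.
    static boolean contains(Node node, int target) {
        if (node == null) return false;               // fell off the tree: not found
        if (target < node.data) return contains(node.left, target);
        if (target > node.data) return contains(node.right, target);
        return true;                                  // target == node.data
    }
}
```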

Best and Average Case Complexity Analysis

BST Adding

- Similar to BST search

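- Use ‘pointer reinforcement’, which differs from ‘look-ahead’: look-ahead keeps track of the parent instead of the current node and never reaches a null node.

- Pointer reinforcement uses the return value of each recursive call to restructure the tree: the parent reassigns its child pointer to whatever the call returns. This happens after the recursive call.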

Pointer Reinforcement Pseudocode

- Public wrapper + private helper method.

- Make sure to return the current node at the end to reinforce the pointer.
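The pointer reinforcement add can be sketched with a public wrapper and a private recursive helper (int data; the class name BST is hypothetical; ignoring duplicates is an assumption of this sketch). Every call returns the node that should occupy its position, and the parent reassigns its child pointer to that return value:

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical BST of ints; add uses pointer reinforcement.
class BST {
    private static class Node {
        int data;
        Node left, right;

        Node(int data) {
            this.data = data;
        }
    }

    private Node root;
    private int size;

    // Public wrapper: the helper's return value becomes the (possibly new) root.
    public void add(int data) {
        root = addHelper(root, data);
    }

    // Private helper: returns the node that belongs at this position.
    private Node addHelper(Node curr, int data) {
        if (curr == null) {
            size++;
            return new Node(data); // fell off the tree: the new node goes here
        }
        if (data < curr.data) {
            curr.left = addHelper(curr.left, data);   // reinforce left pointer
        } else if (data > curr.data) {
            curr.right = addHelper(curr.right, data); // reinforce right pointer
        }
        // Equal data: treated as a duplicate, so nothing changes.
        return curr; // returning curr leaves the parent's pointer as it was
    }

    public int size() {
        return size;
    }

    // Inorder listing, used here to check that the BST order holds.
    public List<Integer> inorder() {
        List<Integer> out = new ArrayList<>();
        inorder(root, out);
        return out;
    }

    private void inorder(Node curr, List<Integer> out) {
        if (curr == null) return;
        inorder(curr.left, out);
        out.add(curr.data);
        inorder(curr.right, out);
    }
}
```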

BST Removing

- Must find element before removing.

- 3 cases of removal: zero children, one child, and two children (the two-child case uses either the predecessor or the successor).

- Zero children (easiest): just remove the node. No restructuring needed.

- One child: set the pointer of the parent node (13) to the removed node's child (8).

- Two children, predecessor strategy: find the “predecessor” of the node to be deleted and use it to replace the deleted node's data. The predecessor is the rightmost node in the deleted node's left subtree.

- Two children, successor strategy: find the “successor” of the node to be deleted and use it to replace the deleted node's data. The successor is the leftmost node in the deleted node's right subtree.

- The resulting tree differs depending on whether the predecessor or successor strategy is used.

- The two i variables (public, private) are different variables.

Pointer Reinforcement Remove Pseudocode

- A dummy node is passed into the recursive call to store the removed data so the wrapper can return it.

- Pointer reinforcement logic is the same as in add.

- 2 base cases: the node is null (data not found), or the node's data matches the data to remove.

- If no children: return null, which sets the parent’s pointer to this node to null.

- If one child: return that non-null child node.

- If 2 children: make another recursive call to remove the successor (or predecessor).

- That call uses a second dummy node to store the successor's data while removing the successor node. Then set the current node’s data to the successor's data.
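The remove procedure above can be sketched as follows, assuming int data, the successor strategy for the two-child case, and a hypothetical class name BSTRemove. A dummy node carries the removed data back up to the public wrapper, and a second dummy node carries the successor's data. Throwing NoSuchElementException when the data isn't found is an assumption of this sketch.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.NoSuchElementException;

// Hypothetical BST of ints with pointer-reinforcement remove (successor strategy).
class BSTRemove {
    static class Node {
        int data;
        Node left, right;

        Node(int data) {
            this.data = data;
        }
    }

    Node root;
    int size;

    void add(int data) {
        root = add(root, data);
    }

    private Node add(Node curr, int data) {
        if (curr == null) {
            size++;
            return new Node(data);
        }
        if (data < curr.data) curr.left = add(curr.left, data);
        else if (data > curr.data) curr.right = add(curr.right, data);
        return curr;
    }

    // Public wrapper: the dummy node carries the removed data back up.
    int remove(int data) {
        Node dummy = new Node(0); // placeholder; overwritten when data is found
        root = removeHelper(root, data, dummy);
        size--;
        return dummy.data;
    }

    private Node removeHelper(Node curr, int data, Node dummy) {
        if (curr == null) { // base case 1: fell off the tree
            throw new NoSuchElementException(data + " is not in the tree");
        }
        if (data < curr.data) {
            curr.left = removeHelper(curr.left, data, dummy);
        } else if (data > curr.data) {
            curr.right = removeHelper(curr.right, data, dummy);
        } else { // base case 2: found the data
            dummy.data = curr.data;
            if (curr.left == null) {
                return curr.right; // 0 children (returns null) or only a right child
            } else if (curr.right == null) {
                return curr.left;  // only a left child
            } else {
                // 2 children: remove the successor, then copy its data here.
                Node dummy2 = new Node(0);
                curr.right = removeSuccessor(curr.right, dummy2);
                curr.data = dummy2.data;
            }
        }
        return curr; // reinforce the parent's pointer
    }

    // Removes the leftmost node in this subtree, storing its data in dummy.
    private Node removeSuccessor(Node curr, Node dummy) {
        if (curr.left == null) {
            dummy.data = curr.data;
            return curr.right; // the successor has no left child
        }
        curr.left = removeSuccessor(curr.left, dummy);
        return curr;
    }

    List<Integer> inorder() {
        List<Integer> out = new ArrayList<>();
        inorder(root, out);
        return out;
    }

    private void inorder(Node curr, List<Integer> out) {
        if (curr == null) return;
        inorder(curr.left, out);
        out.add(curr.data);
        inorder(curr.right, out);
    }
}
```

Removing the root 50 from a tree containing 50, 25, 75, 10, 30, 60, 90 copies the successor 60 into the root and splices 60's old node out of 75's left pointer.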

END OF PROBLEM SET 1

M6 - Heaps

Let's look at the "cousin" of BSTs in the Binary Tree family: (binary) Heaps. Heaps are a binary tree-type data structure that prioritizes access to either the smallest or largest item in the data structure, depending on the heap type. In a sense, heaps are a specialist data structure, most commonly coupled with the Priority Queue ADT, whereas BSTs are a generalist data structure useful for a lot of different operations.

An interesting caveat to heaps is that unlike BSTs, they are commonly implemented using backing arrays. There is an additional shape property constraint for heaps on top of the binary tree shape which lends itself to an array implementation. This allows us the opportunity to explore how an array implementation may work for a tree-type data structure. We'll explore this through three operations: adding, removing, and building a heap from a group of unordered data.

Learning Objectives

Students will:

- Learn about heaps, the most common data structure for implementing the Priority Queue ADT.

- Break down and understand efficient algorithms for adding, removing, and building a heap.

- Expand their knowledge of different tree structures and orderings, along with the benefits (and difficulties) such constraints bring.

- Experience what it's like to back a tree-type data structure using an array rather than in a linked fashion.

Intro to (Binary) Heaps

Here we present an introduction to a new data structure, (binary) heaps. The term binary is in parentheses because there are multiple types of heaps, and we are discussing a particular one. Binary heaps are the most common type of heap. Thus, when a heap is mentioned, it is usually referring to a binary heap.

Heaps are another binary tree type of data structure with the additional shape property of completeness. We will study this concept in this lesson. Completeness is a very nice property that lends itself to an array-implementation, which is usually faster at a low-level when compared to a tree node implementation. Heaps also have a different order property when compared to BSTs. The heap order property changes the way we need to look at basic operations such as add and remove.

- Heaps must be “complete” - filled left to right with no gaps.

Order Property

In a heap, the parent node is always larger (in the case of a maxheap) or smaller (in the case of a minheap) than its children. There is no relationship between the children.

Shape Property

A heap is always complete. At any point, each level of the tree is completely filled, except for the last level, which may be filled from left to right with no gaps.

- Min Heap - Smallest element at the root

- Max heap - Largest element at the root

- Backing array doesn’t have any gaps

Heap Operations

- Not designed for search!

- Index 0 never used (makes math easier)

- Only consider add and remove.

- Key Idea: Enforce shape property, then order property. “Up-heaping”.

- The left child of an item at index i is at index 2i, and the right child is at index 2i + 1.

- The parent of an item at index i is at index i / 2 (assuming integer division).

Adding

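- Add the new item at the next open spot in the array (shape property), then upheap: swap it with its parent while it violates the order property. Upheaping may continue all the way up to the root node.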

Removing

- You can only remove from the root

- Remove the item at the root, and move the item in the last slot of the heap to the root. This might break the order property, but that is fine.

- Compare the item with the item in the left child and the item in the right child. If either the left child or the right child is smaller (in the case of a minheap) or larger (in the case of a maxheap), swap the item with the smaller/larger of the two children.

- Repeat the previous step until you don’t make a swap or you reach the bottom of the heap.

- Re-ordering (the downheap) takes the most time, just like upheaping does in add: at most one swap per level, so O(log n).

- When the order property is violated, we perform a downheap (the example shown is a max heap).

- Continue until order property is satisfied.
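A minimal min-heap sketch of add (upheap) and remove (downheap), assuming int data and a backing array that leaves index 0 unused, so the 2i, 2i + 1, and i / 2 index formulas above apply directly. The class name MinHeap and the starting capacity of 8 are hypothetical choices; for a max heap, flip the comparisons.

```java
import java.util.Arrays;
import java.util.NoSuchElementException;

// Hypothetical min-heap of ints; data lives at indices 1..size, index 0 unused.
class MinHeap {
    private int[] data = new int[8];
    private int size = 0;

    void add(int x) {
        if (size + 1 == data.length) data = Arrays.copyOf(data, data.length * 2);
        data[++size] = x; // shape property first: the next open slot
        // Then the order property: upheap while smaller than the parent.
        int i = size;
        while (i > 1 && data[i] < data[i / 2]) {
            swap(i, i / 2);
            i /= 2;
        }
    }

    int remove() {
        if (size == 0) throw new NoSuchElementException("heap is empty");
        int min = data[1];
        data[1] = data[size]; // move the last item to the root (shape property)
        data[size--] = 0;
        downheap(1);
        return min;
    }

    int peek() {
        if (size == 0) throw new NoSuchElementException("heap is empty");
        return data[1];
    }

    int size() {
        return size;
    }

    // Swap with the smaller child until no swap is needed or we reach a leaf.
    private void downheap(int i) {
        while (2 * i <= size) {
            int child = 2 * i; // left child
            if (child + 1 <= size && data[child + 1] < data[child]) {
                child++; // right child is smaller
            }
            if (data[i] <= data[child]) break; // order property holds
            swap(i, child);
            i = child;
        }
    }

    private void swap(int a, int b) {
        int tmp = data[a];
        data[a] = data[b];
        data[b] = tmp;
    }
}
```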

Performance

New Operations? New Heaps - Food for Thought

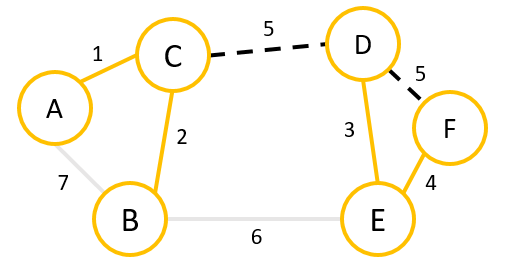

Build Heap Algorithm

- Need to build from bottom up

- Index size / 2 gives you the last parent (the last non-leaf node), so start there.

- Start with the last index divided by 2 (32). Swap the parent with its smaller child (32 with 5) and perform a downheap. The downheap then looks at the swapped child node: if that node is a leaf, the subroutine terminates; if it has children, the swapping continues.

- 71 is swapped with the smallest child; continue the downheap.

- Swap the smallest child with 71. The downheap is complete since 71 is now at a leaf.

- Reach the root node; both children meet the ordering requirement. Terminate the downheap.

- Time complexity is actually O(n), not O(n log n).

- About half of the nodes are leaves with O(1) cost, and only the few nodes near the root have O(log n) cost, so the total work adds up to O(n).
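Build heap can be sketched bottom-up as follows (min-heap, int data, index 0 unused; names hypothetical). Every index greater than n / 2 is a leaf and is already a valid one-node heap, so the loop only downheaps indices n / 2 down to 1:

```java
// Hypothetical bottom-up build heap for a min-heap with index 0 unused.
class BuildHeap {
    // Returns a new array holding the heap at indices 1..n.
    static int[] buildHeap(int[] values) {
        int n = values.length;
        int[] heap = new int[n + 1];
        System.arraycopy(values, 0, heap, 1, n);
        // Start at the last parent (n / 2) and work back to the root.
        for (int i = n / 2; i >= 1; i--) {
            downheap(heap, i, n);
        }
        return heap;
    }

    // Swap with the smaller child until the order property holds or we hit a leaf.
    private static void downheap(int[] heap, int i, int size) {
        while (2 * i <= size) {
            int child = 2 * i;
            if (child + 1 <= size && heap[child + 1] < heap[child]) child++;
            if (heap[i] <= heap[child]) return;
            int tmp = heap[i];
            heap[i] = heap[child];
            heap[child] = tmp;
            i = child;
        }
    }

    // Checks the min-heap order property for every parent/child pair.
    static boolean isMinHeap(int[] heap, int size) {
        for (int i = 2; i <= size; i++) {
            if (heap[i / 2] > heap[i]) return false;
        }
        return true;
    }
}
```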

M7 - Hashmaps

Now, we take a look at HashMaps (HashTables), one of the most commonly used data structures in computer science. HashMaps are very useful when we want to search for specific data in our data structure. This helps with the Set ADT, where we check for membership of data, and with the Map ADT, where we use the searched data as a unique identifier for some other data (in which case the searched data is called a key).

Looking back at our toolbox, the best time complexity we have for this problem so far is on average 𝑂(log(𝑛)) via BSTs. Remarkably, we can do better, with 𝑂(1) search times on average! In this module, we'll be introducing the big idea of how we achieve this incredible efficiency, known as hashing. We won't be rigorously proving the average efficiency, but we hope to provide you with an understanding of the mechanisms of hashing, the flexibility such a technique brings, as well as some intuition for why the idea works.

Learning Objectives

Students will:

- Learn about the Set ADT and the Map ADT.

- Explore the idea of hash functions, along with how they're used in HashMaps to achieve 𝑂(1) average search times.

- Understand the challenges of collisions, a necessary consequence of how HashMaps work.

- Observe multiple collision resolution strategies at work: external chaining, linear probing, quadratic probing, and double hashing.

Introduction to HashMaps

- Example: a backing array of length 100

- Mod the hash code by 100 to compress keys into indices 0 to 99

- Collision may occur with modulo - this is inevitable, but there are ways around it

The Map and Set ADTs

Let's first recap what we know about the Map ADT. Maps are defined by multiple key-value pairs (denoted <K,V>), satisfying the following properties:

- Keys are unique and immutable, essentially allowing keys to act as search objects for their associated values.

- Values are tied to keys, but they do not need to be unique. There may be multiple key value pairs where the value is the same.

- The keys do not need to have any sort of ordering to them, though they are allowed to. If ordering is desired, then it becomes something called an Ordered Map, which we will not be delving into in this course.

V put(K key, V value): This method adds the key-value pair to the map. If the key is already present in the map, then the value is replaced with the new one passed into the method, and the old value is returned. If the key is not a duplicate, then a null reference is returned.

V remove(K key): This method removes a key-value pair from the map and returns the value associated with the key.

V search(K key): This method looks for the key in the map and returns the associated value. A commonly used modification of this operation is to just check whether a key is in the map (typically called containsKey).

Set<K> keySet(): This method returns a Set of all of the keys in the map. We will discuss what a set is below.

List<V> values(): This method returns a list of all the values in the map. The ordering of the list is in no particular order.
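As a point of comparison, Java's built-in java.util.HashMap implements these Map ADT operations, with get playing the role of search. One difference from the list above: HashMap.values() returns a Collection<V> rather than a List<V>. The small demo below (class name hypothetical) exercises the return values described above using only standard-library calls:

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical demo of Map ADT return values using java.util.HashMap.
class MapADTDemo {
    static String run() {
        Map<String, Integer> ages = new HashMap<>();
        Integer first = ages.put("alice", 30);        // new key: returns null
        Integer old = ages.put("alice", 31);          // duplicate key: returns old value 30
        ages.put("bob", 31);                          // values need not be unique
        Integer found = ages.get("alice");            // "search": returns 31
        boolean hasCarol = ages.containsKey("carol"); // false
        Integer removed = ages.remove("bob");         // returns the removed value 31
        return first + " " + old + " " + found + " " + hasCarol + " " + removed + " " + ages.keySet();
    }
}
```

Calling MapADTDemo.run() returns "null 30 31 false 31 [alice]".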

If you think back to some of our earlier data structures such as BSTs, you can think of them as implementing the Set ADT or the Map ADT. If we search in a BST to check for membership of data, then it's a Set. If we're looking up some identifier tied to other data (such as an id number tied to a user record), then it's a Map. For most of the examples in this module, we will omit the values and only look at the keys. This is because there is very little restriction imposed on the values, so any search algorithm will depend solely on the keys.

Spec'ing our HashMap

In the video in the previous section, we touched briefly on the idea of collisions, and as you progress through the module, you will find that collisions are the source of most inefficiencies in HashMaps. Most of our time will be spent on how to resolve collisions (collision resolution strategies), but the truth of the matter is that much of the work of a good HashMap occurs even before any of these collision strategies come into play! The old adage "Prevention is the best medicine" applies here very well, so let's take a quick look at some things to consider when designing a HashMap.

Picking Hash Functions

The first thing we're going to look at is hash functions. Formally, a hash function is a function ℎ:𝐾→𝑍, which means a mapping from keys to integers. This step is necessary because our keys might not even be numerical like the phone number example from the video. For example, if our key is a String, then we need a function to convert from a String to an Integer.

As for the requirements of this function, we technically only require that if two keys, 𝑘1 and 𝑘2, are equal, then their hash function evaluations, ℎ(𝑘1) and ℎ(𝑘2) are equal. So, technically, the zero-function, where we map every possible key to 0 is a valid hash function. However, it's not a very good hash function. We want the reverse to be as close to true as possible as well: if the hash function evaluations are equal, then the keys are also equal. In some cases, this may be possible, but in many cases, it may not be possible without infinite memory (or may not be possible at all). After all, what good is a perfect hash function if we can't store it in memory?

Let's take a look at our example of converting String keys into Integers. One reasonable way to do this is to assign each character a numerical value, then add those values up. For example, if we assigned the letter A the value 1 and the letter B the value 2, then the string ABAB would have the hash value 1+2+1+2=6. This is an okay hash function, but it has a weakness: it doesn't take the ordering of characters into account at all, so any string of length 4 with two A's and two B's will collide into the same hash value. One way to fix this is to take a page out of our number system and multiply each value by something based on its position before adding them up. For example, if we multiply by powers of 10 based on the position from right to left, then we get 1×10³ + 2×10² + 1×10¹ + 2×10⁰ = 1212.
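The two string hash functions described above can be sketched as follows, assuming keys made only of uppercase letters A to Z and the A = 1, B = 2, ... mapping from the example (all names hypothetical). The positional version uses Horner's rule: multiplying the running total by the base at each step gives the same result as multiplying each letter by a power of the base.

```java
// Hypothetical string hash functions over the letters A..Z (A = 1, B = 2, ...).
class StringHashes {
    static int letterValue(char c) {
        return c - 'A' + 1;
    }

    // Order-insensitive: "ABAB" and "BABA" collide.
    static int sumHash(String s) {
        int h = 0;
        for (char c : s.toCharArray()) h += letterValue(c);
        return h;
    }

    // Positional: the leftmost letter ends up multiplied by base^(length - 1).
    // For long strings the int silently overflows and wraps, which is
    // acceptable for hashing.
    static int positionalHash(String s, int base) {
        int h = 0;
        for (char c : s.toCharArray()) h = h * base + letterValue(c);
        return h;
    }
}
```

With base 10, positionalHash("ABAB", 10) is 1212 and positionalHash("BABA", 10) is 2121, while both strings share sumHash 6. For comparison, Java's String.hashCode() uses the same positional idea with base 31 and each character's numeric char value.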

This was just one example of a hash function from Strings to Integers, but there are plenty of other reasonable ones as well. In Java, hash functions are written in the hashCode() method, which is why Java always tells you to override it when you override the equals() method!

TL;DR on .hashCode():

- .hashCode() returns a numeric (int) representation of an object

- .hashCode() is an instance method inherited from Object, so every Java object has it

- In the ideal but unrealistic scenario, every distinct object has a distinct hashcode value

- In the real scenario, some objects which are different have the same hashcode, which is called a collision

- Objects that are equal by .equals() must have the same hashcode

- When overriding .equals(), also override the .hashCode() method with an implementation aimed at minimizing collisions.
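The equals/hashCode contract can be illustrated with a small value class. Point is a hypothetical class (not the course's Vehicle.java); its hashCode uses the standard Objects.hash, which combines the fields positionally with a multiplier of 31, so equal Points always get equal hash codes:

```java
import java.util.Objects;

// Hypothetical value class: two Points are equal if their coordinates match.
final class Point {
    private final int x;
    private final int y;

    Point(int x, int y) {
        this.x = x;
        this.y = y;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof Point)) return false;
        Point other = (Point) o;
        return x == other.x && y == other.y;
    }

    // Must agree with equals: equal Points produce equal hash codes.
    // Objects.hash weights each field by its position, so (1, 2) and (2, 1) differ.
    @Override
    public int hashCode() {
        return Objects.hash(x, y);
    }
}
```

Without the hashCode override, two equal Points would usually land in different buckets, and a HashSet lookup for an equal Point could fail.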

Vehicle.java

Other Considerations

Once we've picked our hash function, we also have three other things to consider: the compression function, the table size, and the load factor. The compression function's job is to shrink and shift the output of the hash function so that it fits into the range of the backing table (array). This is because, for most applications, it's not reasonable to have a table whose capacity is the largest possible hash value. The most common compression function you will see is to simply mod by the table length, though there can be others.

Since the compression function often uses the table size to "spread out" our data over the specified range, it's also common to make the table size a prime number to minimize collisions due to compression. There are, again, other choices for table sizes, but most often, HashMap implementations will "lazily attempt" to use a prime number for the table length without actually checking whether it's prime. Another popular choice is a power of two, since it makes the modulo operation more efficient (this is out of scope for the course, but a general modulo requires a relatively expensive division, whereas modding by a power of two reduces to a cheap bitmask).

Finally, we have the load factor, which is defined as the size over the capacity. The related parameter, the maximum load factor, determines how high we're willing to let the load factor become before we resize the table, and it varies quite a bit between implementations. It's also very dependent on how we decide to handle collisions. The intuition here is that as the load factor increases, the table becomes inundated with entries, and collisions become more likely. If we keep the threshold low, then we can almost guarantee 𝑂(1) search times, but we may have to resize more often, and we will use more memory than we may want to.
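The compression step and the load-factor check can be sketched as small helpers (names and the example threshold are hypothetical). Math.floorMod is used instead of % because Java hash codes may be negative, and % would then produce a negative index:

```java
// Hypothetical helpers for compression and load-factor checks.
class HashMath {
    // Compression: shrink any int hash into [0, capacity).
    static int compress(int hash, int capacity) {
        return Math.floorMod(hash, capacity);
    }

    // Load factor = size / capacity.
    static double loadFactor(int size, int capacity) {
        return size / (double) capacity;
    }

    // Resize before an add if the new entry would push us past the maximum.
    static boolean needsResize(int size, int capacity, double maxLoadFactor) {
        return loadFactor(size + 1, capacity) > maxLoadFactor;
    }
}
```

For example, compress(123, 100) is 23, and compress(-7, 5) is 3 rather than the -2 that -7 % 5 would give.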

As you can see, a lot goes into designing a good HashMap. There are countless papers and studies out there looking at what hash functions work best in practice and in theory, so it's a very interesting topic to look at! It also speaks to how important these ideas are; these ideas are quite flexible and general, so they can be applied in data structures other than HashMaps.

External Chaining

Now begins our exploration into various collision handling policies. These policies can be broadly categorized into two different types:

- Closed Addressing: A policy where all keys that collide into the same location are stored at that location by some means.

- Open Addressing: A policy where additional, colliding keys can be stored at a different location based on the original location.

What is External Chaining?

- Backing structure: array of LinkedLists → each index can store multiple entries

- “Closed addressing” strategy - entries are put at the exact index calculated (see index 61 in image below).

- To check for a duplicate key, you need to traverse the entire LinkedList at that index

Resizing with External Chaining

- When using external chaining, we don’t run out of space. However, long chains impact runtimes.

- Example: given a backingArray of size 10 where index 0 holds 5 K-V pairs in its LinkedList, load factor = size / capacity = 5 / 10 = 50% (Tip: size counts K-V pairs, not filled indices).
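External chaining can be sketched as an array of LinkedLists, assuming String keys, int values, a starting capacity chosen by the caller, a hypothetical maximum load factor of 0.67, and a resize to 2N + 1 (the same resize rule these notes use for linear probing). All names are hypothetical; new entries are added at the front of the chain.

```java
import java.util.LinkedList;

// Hypothetical external-chaining map: String keys, int values.
class ChainingMap {
    private static class Entry {
        final String key;
        int value;

        Entry(String key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    private static final double MAX_LOAD_FACTOR = 0.67; // assumed threshold
    private LinkedList<Entry>[] table;
    private int size; // number of K-V pairs, not filled indices

    @SuppressWarnings("unchecked")
    ChainingMap(int capacity) {
        table = (LinkedList<Entry>[]) new LinkedList[capacity];
    }

    private static int indexFor(String key, int length) {
        return Math.floorMod(key.hashCode(), length); // hashCode() may be negative
    }

    // Returns the old value if the key was present, otherwise null.
    Integer put(String key, int value) {
        if ((size + 1) / (double) table.length > MAX_LOAD_FACTOR) {
            resize();
        }
        int idx = indexFor(key, table.length);
        if (table[idx] == null) {
            table[idx] = new LinkedList<>();
        }
        // Duplicate check: walk the entire chain at this index.
        for (Entry e : table[idx]) {
            if (e.key.equals(key)) {
                int old = e.value;
                e.value = value;
                return old;
            }
        }
        table[idx].addFirst(new Entry(key, value));
        size++;
        return null;
    }

    Integer get(String key) {
        LinkedList<Entry> chain = table[indexFor(key, table.length)];
        if (chain != null) {
            for (Entry e : chain) {
                if (e.key.equals(key)) return e.value;
            }
        }
        return null;
    }

    int size() {
        return size;
    }

    // Rehash every entry into a table of capacity 2N + 1.
    @SuppressWarnings("unchecked")
    private void resize() {
        LinkedList<Entry>[] old = table;
        table = (LinkedList<Entry>[]) new LinkedList[2 * old.length + 1];
        for (LinkedList<Entry> chain : old) {
            if (chain == null) continue;
            for (Entry e : chain) {
                int idx = indexFor(e.key, table.length);
                if (table[idx] == null) table[idx] = new LinkedList<>();
                table[idx].addFirst(e);
            }
        }
    }
}
```

The strings "Aa" and "BB" have the same String.hashCode() (2112), so they always share a chain, and both remain retrievable.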

Linear Probing, Example 1 (Adding)

What is probing?

- Backing structure - Array → each index can store only one entry.

- “Open addressing” strategy - entries may not end up at the original index calculated.

- Linear Probing: If a collision occurs at a given index, increment the index by one and check again.

Index = (h + origIndex) % backingArray.length (h = number of times probed [0 ... N])

Wraparound technique: 50 hashes to index 50 % 7 = 1; after 6 probing steps, it wraps around to index (1 + 6) % 7 = 0, the first index.
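The linear probing index formula, and the wraparound in the 50 example, can be checked with a tiny helper (name hypothetical) that lists the indices visited for h = 0, 1, 2, ...:

```java
// Hypothetical helper listing the indices linear probing visits.
class LinearProbe {
    // Index = (origIndex + h) % length for h = 0, 1, 2, ..., probes - 1.
    static int[] probeSequence(int origIndex, int length, int probes) {
        int[] seq = new int[probes];
        for (int h = 0; h < probes; h++) {
            seq[h] = (origIndex + h) % length;
        }
        return seq;
    }
}
```

With a table of length 7, the key 50 starts at index 1 and visits 1, 2, 3, 4, 5, 6, and then wraps to 0.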

Linear Probing, Examples 2 and 3 (Searching + Removing)

The next operations we take a look at are searching and removing from a HashMap. As it turns out, removing from a HashMap doesn't work if we remove items from the array normally. So, we perform what we call a soft removal. Soft removals are where we leave the entry there, but mark it with a flag to denote that the entry is removed. Such entries are called DEL markers (some sources refer to them as tombstones). These are entries that exist in the array in order to assist in HashMap operations, but from the user's perspective, looking at the HashMap as a black box, these entries do not exist at all since the user has already removed them.

Removing from HashMap

- Move through array and find value to remove. Pop the value and replace it with a DEL marker.

- DEL markers are typically implemented as a boolean flag on the entry at that index. We don’t use null because empty array indices are the true nulls.

Putting into DEL Index

Probing Scenarios:

- Valid (not null or deleted) and unequal key

- Valid and equal key

- Deleted (DEL)

- Null

- Start at the hashed index of the key being put (e.g., idx = H(1) % 7 = 1), then keep incrementing the index.

- Save the first DEL index, if one is encountered.

- Continue moving through the array to see if the key is already present (if so, replace its value).

- If not, place the new entry into the saved DEL index.

- If a null entry is found and no DEL index was encountered, place the new entry in that first null index.

Resizing Backing Array (for Linear Probing)

When the load factor surpasses the threshold:

- Create a new backing array of capacity 2N+1

- Loop through old backing array

- Rehash all cells to new backing array

- Skip over all DEL markers. They no longer serve their purpose.
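Putting the linear probing pieces together, here is a minimal sketch of a map with DEL markers, assuming int keys (used directly as their own hash code), int values, a hypothetical max load factor of 0.67, and the 2N + 1 resize. Searching stops at a null entry or after table.length probes; put reuses the first DEL index it passed if the key turns out to be absent; resize skips DEL markers. Class and method names are hypothetical.

```java
// Hypothetical linear-probing map with DEL markers: int keys, int values.
class LinearProbingMap {
    private static class Entry {
        final int key;
        int value;
        boolean removed; // DEL marker (soft removal)

        Entry(int key, int value) {
            this.key = key;
            this.value = value;
        }
    }

    private static final double MAX_LOAD_FACTOR = 0.67; // assumed threshold
    private Entry[] table;
    private int size; // counts live entries only, never DEL markers

    LinearProbingMap(int capacity) {
        table = new Entry[capacity];
    }

    // Returns the old value if the key was present, otherwise null.
    Integer put(int key, int value) {
        if ((size + 1) / (double) table.length > MAX_LOAD_FACTOR) {
            resize();
        }
        int start = Math.floorMod(key, table.length);
        int target = -1; // first DEL index seen, else the first null index
        for (int h = 0; h < table.length; h++) {
            int idx = (start + h) % table.length;
            Entry e = table[idx];
            if (e == null) {                 // null: the key is absent
                if (target == -1) target = idx;
                break;
            } else if (e.removed) {          // DEL: remember the first one
                if (target == -1) target = idx;
            } else if (e.key == key) {       // valid and equal: replace value
                int old = e.value;
                e.value = value;
                return old;
            }                                // valid and unequal: keep probing
        }
        if (target == -1) throw new IllegalStateException("no free slot");
        table[target] = new Entry(key, value);
        size++;
        return null;
    }

    Integer get(int key) {
        int idx = find(key);
        if (idx == -1) return null;
        return table[idx].value;
    }

    // Soft removal: flag the entry as DEL instead of setting the slot to null.
    Integer remove(int key) {
        int idx = find(key);
        if (idx == -1) return null;
        table[idx].removed = true;
        size--;
        return table[idx].value;
    }

    int capacity() {
        return table.length;
    }

    // Index of a live entry with this key, or -1; stops at a null entry or
    // after table.length probes, whichever comes first.
    private int find(int key) {
        int start = Math.floorMod(key, table.length);
        for (int h = 0; h < table.length; h++) {
            int idx = (start + h) % table.length;
            Entry e = table[idx];
            if (e == null) return -1;
            if (!e.removed && e.key == key) return idx;
        }
        return -1;
    }

    // Rehash live entries into capacity 2N + 1, skipping DEL markers.
    private void resize() {
        Entry[] old = table;
        table = new Entry[2 * old.length + 1];
        for (Entry e : old) {
            if (e == null || e.removed) continue;
            int idx = Math.floorMod(e.key, table.length);
            while (table[idx] != null) idx = (idx + 1) % table.length;
            table[idx] = e;
        }
    }
}
```

In a table of length 7, keys 1, 8, and 15 all hash to index 1 and land at indices 1, 2, and 3. After removing 8, a search for 15 must probe past the DEL marker at index 2, and a later put of key 22 (also hashing to 1) reuses that DEL slot.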

The Efficiency of DEL Markers

One question to keep in mind, as you consider the use of DEL Markers for removing, is how these DEL markers impact efficiency. The put/remove/search operations all have termination conditions for whether the key is found in the map or not. If the key is in the map, then all is well. However, the more complex problem is when the key is not in the map. There are two termination conditions for when the key is not there, whichever comes first:

- We find a null entry in the table to tell us to stop searching.

- We probe table.length times, meaning that we've checked every spot in the table already.

For example, suppose we have a table of length 13, and we add and then immediately remove each of the keys 0,1,…,11,12 from the table. This leaves us with a final table of size 0 with no null entries (every slot holds a DEL Marker), all while never having the load factor be larger than 1/13 ≈ 0.08. This configuration is terrible because it guarantees that any put/remove/search takes 𝑂(𝑛) time; it just goes to show that DEL Markers worsen the efficiency of our operations. This is also why, when we resize, we get rid of any DEL Markers in the process.

There are ways to try to avoid this, such as including DEL Markers in the load factor calculation, or triggering an intentional resize or reconstruction of the table if we know beforehand we'll be removing a lot. We won't be delving into more detail than this, but it is worth keeping in mind the cost that DEL Markers have on performance.

Quadratic Probing

Previously, we saw the most basic of open addressing techniques, linear probing. If you do some examples with it, you will find that linear probing has the problem of developing contiguous blocks of data. These blocks of data can be problematic as they get larger, because any search for data in these indices will necessarily continue until we hit the end of that block.

For example, in the diagram above, indices 3 - 7 are occupied by either data or DEL Markers. If a put/remove/search operation occurs at any of these indices, then the operation continues until it reaches index 8, the green cell, giving degenerate, linear performance. This problem is known as primary clustering (or turtling), and the effect can be mitigated by quadratic probing, which is covered in this lesson.

What is Quadratic Probing?

- Quadratic Probing: If a collision occurs at a given index, add h^2 to the original index and check again. Why? Breaks up clusters created by linear probing.

Index = (h^2 + origIndex) % backingArray.length (h = number of times we've probed [0 ... N]).

Example 1

Solutions to Infinite Probing

What happens when quadratic probing keeps finding occupied indices (infinite probing)? (See value 22 in Example 1)

- Solution 1: Continually resize until a spot is eventually found

- Solution 2: Impose a set of conditions on the table to ensure that this scenario never occurs.
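The infinite probing problem can be seen directly by listing which indices quadratic probing can ever reach (helper names hypothetical). Because h² mod N repeats, some indices may never be probed: with N = 7 and a starting index of 0, only 0, 1, 2, and 4 are reachable, so if those four slots are full, probing never ends even though the table still has empty slots. With a prime N, the first ⌈N/2⌉ probes land on distinct indices, which is why a prime table length combined with a max load factor of 0.5 avoids the problem.

```java
import java.util.Set;
import java.util.TreeSet;

// Hypothetical helper: which indices can quadratic probing ever reach?
class QuadraticProbe {
    // Index = (origIndex + h^2) % length; long arithmetic avoids overflow of h * h.
    static int index(int origIndex, int h, int length) {
        return (int) ((origIndex + (long) h * h) % length);
    }

    // Collects every index visited during the first `probes` probes.
    static Set<Integer> reachable(int origIndex, int length, int probes) {
        Set<Integer> seen = new TreeSet<>();
        for (int h = 0; h < probes; h++) {
            seen.add(index(origIndex, h, length));
        }
        return seen;
    }
}
```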

The Complexities of Quadratic Probing

As we saw in the video, while quadratic probing is a simple modification of the linear probing schemes, it brings with it some more complexities and complications with it. For one, it was proposed to solve the problem of primary clustering, but quadratic probing suffers from something called secondary clustering, which is where keys belonging to different indices collide in quadratic steps rather than linear ones. It isn't as obvious as in linear probing since there isn't a huge block of data, but it does still exist.

The most pervasive issue of quadratic probing is the infinite probing issue seen in the video. There are two approaches to this problem:

- If table.length probes have been made but a spot still hasn't been found, then resize the hash table and try again.

- Construct the table so that infinite probing will never occur.
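The first approach in the list above can be sketched in Python as follows. This is a hypothetical helper, not the course's homework API: the table is a plain list with `None` for empty slots, Python's built-in `hash()` stands in for the key's hash code, and DEL markers and duplicate keys are left out to keep it short. Returning `False` after `len(table)` failed probes is the signal to resize and retry.

```python
def quadratic_put(table, key):
    """Insert key using quadratic probing; return False if no spot was found.

    Hypothetical sketch: None marks an empty slot, hash() stands in for the
    key's hash code, and DEL markers/duplicates are ignored for brevity.
    """
    length = len(table)
    orig_index = hash(key) % length
    for h in range(length):  # give up after table.length probes
        index = (h * h + orig_index) % length
        if table[index] is None:
            table[index] = key
            return True
    return False  # caller should resize the table and try again


# Slots 2, 3, 4, and 6 are full; key 9 starts at index 9 % 7 = 2.
table = [None, None, "x", "x", "x", None, "x"]
print(quadratic_put(table, 9))  # False, even though slots 0, 1, and 5 are empty
```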

The second option will have to be a bit mysterious since a number theory background is not a prerequisite for this course, but some hashing schemes can eliminate the infinite probing case entirely. For example, if we enforce that our table length is a prime number, and we maintain a max load factor of 0.5, then we can guarantee that it will not happen.
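The number theory behind the second option is out of scope, but we can at least observe it numerically. This hypothetical helper counts how many distinct offsets h^2 % length the first few probes produce; since every probe starts from the same origIndex, distinct offsets mean distinct indices.

```python
def distinct_probe_count(length, num_probes):
    """Count the distinct offsets h^2 % length among the first num_probes probes."""
    return len({(h * h) % length for h in range(num_probes)})


# Prime length 11: the first (11 + 1) // 2 = 6 probes all land on different slots.
# A max load factor of 0.5 means at most 5 of the 11 slots are full before an
# insert, so at least one of those 6 slots must be empty.
print(distinct_probe_count(11, 6))   # 6

# Length 16 (not prime): squares mod 16 only ever hit 4 offsets (0, 1, 4, 9),
# so a table that is only 25% full can already leave a key with nowhere to go.
print(distinct_probe_count(16, 16))  # 4
```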

Double Hashing

What is Double Hashing?

- Double Hashing: If a collision occurs at a given index, add a multiple of c to the original index and check again.

- Why? Breaks up clusters created by linear probing

Index = (c * h + origIndex) % backingArray.length (h = number of times we've probed)

c = result of second hash function (linear probing if c = 1)

- Hashing strategy:

- First Hash: H(k), used to calculate origIndex

- Second hash: D(k), used to calculate probing constant.

D(k) = q - (H(k) % q)

- q = some prime number, and q < N

- Delete flag still required when removing.
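A minimal sketch of the double hashing probe sequence, assuming integer keys with H(k) = k so that origIndex = k % N. The function name and the choice of N = 13 and q = 7 are illustrative assumptions.

```python
def double_hash_indices(key, length, q, num_probes):
    """First num_probes indices visited by double hashing.

    Assumes non-negative int keys with H(k) = k. D(k) = q - (H(k) % q),
    where q is a prime smaller than the table length.
    """
    orig_index = key % length
    c = q - key % q  # always in 1..q, so the step size is never 0
    return [(c * h + orig_index) % length for h in range(num_probes)]


print(double_hash_indices(22, 13, 7, 4))  # [9, 2, 8, 1]  (step c = 6)
print(double_hash_indices(9, 13, 7, 4))   # [9, 1, 6, 11] (step c = 5)
```

Keys 22 and 9 both start at index 9, yet they follow different probe sequences because D(k) gives them different step sizes. With quadratic probing, both keys would follow the exact same sequence.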

Example 1

More thoughts on double hashing

As we saw in the example, double hashing can be a powerful framework, so long as it is implemented carefully and efficiently. With a good secondary hash function (one that spreads out the keys and is relatively independent of the primary hash function), even pathological cases where many keys collide at the same index stay manageable, because each key follows its own probe sequence; linear and quadratic probing send every such key down the same sequence.

Before moving on, we would like to make a few subtler remarks, some of which are outside the scope of the course:

- Linear probing is a special case of double hashing (c = 1), but quadratic probing is not. The secondary hash function takes the key as input, whereas to reproduce quadratic probing we'd need the probe count as the input. The takeaway here is that the secondary hash function is truly a hash function, since its only input is the key, not the probe count.

- Double hashing might be more computationally expensive than the other strategies depending on what the secondary hash function is. At a low level, computers often have specialized instruction sets that do things like incrementing (which we'd use in linear probing), making those strategies faster if collisions aren't that pathological.

Collision Handling: A Summary

We've covered a lot of ground in this module: the basics of HashMaps, plus four different collision handling strategies. We presented them in the following order: external chaining, linear probing, quadratic probing, and double hashing. This ordering was chosen for two reasons: (1) we went from simpler concepts to more complicated concepts and (2) we went in (rough) order of popularity.

In practice, you will mostly see external chaining and linear probing (or some variation of them). External chaining is very nice because it is an extremely simple idea. If there are multiple collisions, then just attach the new item to a chain at that index. The primary reason you would not want to use it is something we discussed a while back: locality of reference. Computers are designed to access adjacent/nearby memory locations quickly, which the open addressing schemes can take advantage of. On the other hand, external chaining necessarily involves dynamic allocation for the chaining, so it cannot take advantage of this. Linear probing is the simplest scheme to take advantage of this, so it's another popular choice.

So, what about quadratic probing and double hashing? We made a few arguments for why they might be useful in their respective lessons, but now it's time to discuss why they might not be very popular.

- Their implementations can be tricky and need to be done carefully. We've had semesters in the past where students implemented quadratic probing. If you ask one of them what the experience was like, they'll probably respond negatively, and for good reason! These schemes can be efficient in terms of collision handling, but their complicated implementations make them unlikely to be used in practice.

- Aim to avoid collisions rather than to fix them. We mentioned this previously, but it's important, so we'll say it again. If you are running into cases where you have lots of collisions, then you're not using HashMaps correctly! The 𝑂(1) performance of HashMaps happens when there are few collisions, not when we have many collisions. So, if you have lots of collisions, work on the hash function and table sizing rather than the collision strategy.

- The number of collisions is not everything, much happens at a lower level. For example, incrementing is an instruction that is used very often, so computers are optimized to do them very quickly. Arithmetic like multiplication and modulo are also relatively fast, but they're still slower than incrementing. Another example is the idea of locality of reference we mentioned above. Quadratic probing and double hashing "skip" around the array unlike linear probing. So, while they still get a speedup since the memory locations are "close," they're not "adjacent" like they are in linear probing, so it's not as good of a speedup.

- The table configuration might be constrained to avoid the infinite probing problem. Both quadratic probing and double hashing can run into the infinite probing problem if not implemented carefully. If you want to implement them in a way that avoids it completely, then your table length needs to be a prime number. In quadratic probing (and in some cases of double hashing), your max load factor must also be no more than 0.5. These are rather non-trivial constraints! Putting aside the problem of finding a suitably large prime number as well as resizing, a max load factor of 0.5 means that you have to allocate an array where half of the space is never used, which can be a non-starter in many contexts.

If you're interested in the theory side, you may find 𝑘-independent hashing interesting. This concept analyzes the contexts in which we can guarantee 𝑂(1) performance (at least in expectation). But enough theory-crafting, let's take a look at what some popular programming languages use in their standard implementations for HashMaps.

Food for Thought: What do actual programming languages use?

Let's look at three different languages that have some standard implementations: Java, Python, and C++.

- Java's implementation is the HashMap, which uses a variation of external chaining. It starts off with external chaining, but if a chain reaches a certain threshold in size, then it will instead use a balanced BST (we'll cover self-balancing BSTs in the next course), sorting based on the hashcodes and the natural ordering imposed by Comparable if implemented. This threshold approach is used because for small chains, the overhead of a BST is not worth it since the performance costs are similar for small 𝑛. The max load factor is by default 0.75.

- Python's equivalent is the dictionary. There are variants, so let's look at the CPython implementation. This implementation uses a variation of an open addressing strategy like linear probing. It's difficult to describe in detail without getting into the nitty gritty, but it uses a linear recurrence (rather than just incrementing like in linear probing) to determine the probe sequence. Then, it adds a "perturbation" to the recurrence to change how it probes for common failure cases. The table also uses powers of two rather than prime numbers, and the max load factor is 2/3. The CPython source is worth a look if you're interested; its comments give interesting commentary on the design decisions made in the implementation.

- For C++, we have the unordered_map, which uses external chaining. An interesting thing about this is that the max load factor defaults to 1.0, which is a nice reminder that external chaining is the only strategy we've seen where the load factor can exceed 1.
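To give a feel for the CPython probe order mentioned above, here is a simplified Python rendition of the recurrence used in CPython's `Objects/dictobject.c` (`i = (5*i + perturb + 1) & mask`, with `perturb` shifted right by 5 bits on each probe). It sketches only the probe sequence, not the dict itself, and it assumes a non-negative hash value since CPython treats the hash as unsigned.

```python
def cpython_style_probes(hash_value, table_size, num_probes):
    """Simplified sketch of CPython's dict probe order.

    table_size must be a power of two; hash_value is assumed non-negative.
    Everything else (stored hashes, DEL markers, resizing) is omitted.
    """
    mask = table_size - 1
    perturb = hash_value
    i = hash_value & mask  # first probe: low bits of the hash
    probes = [i]
    for _ in range(num_probes - 1):
        perturb >>= 5  # feed in higher bits of the hash a few at a time
        i = (5 * i + perturb + 1) & mask
        probes.append(i)
    return probes


print(cpython_style_probes(0, 8, 8))  # [0, 1, 6, 7, 4, 5, 2, 3]
```

Once `perturb` has been shifted down to 0, the recurrence i = 5i + 1 (mod 2^k) alone visits every slot of a power-of-two table, so the probe sequence cannot get stuck the way quadratic probing can.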

Food for Thought: A Hashing Application: Bloom Filters

The only example of a hashing based data structure/algorithm that will be seen in this course sequence is what has been covered thus far for HashMaps in this module. However, hashing is a very popular and flexible idea which is used in many different applications. We would be remiss if we did not look at any of these applications. Let's take a brief glimpse at a data structure that uses hashing, bloom filters.

We want to set the scene first to motivate bloom filters. Suppose we are given a database that hosts a lot of tagging information. This database takes in some text input, then returns, say, the first 10 results with that tag, sorted by some metric, back to the user. This database is hosted on some server that is relatively far away from you, and your internet speed is slow.

In this context, performing a search can be an expensive operation (the delay to send search request, search the database, and receive the reply may take a while). We would like to avoid sending the request if the search would yield 0 results. Is this possible without hosting the entire database closer to you? The answer is yes! (But you knew that already didn't you?)

Rather than answering the question of "Is this tag in the database?" correctly all the time, what if we allow incorrect answers rarely? More specifically, let's say we allow false positives, which is when the tag is not in the database, but we incorrectly output that it is. A bloom filter does precisely that.

In the flow chart above, we have three possible outcomes. If the bloom filter returns no, then we can be sure the answer is correct. If the bloom filter returns yes, then it could be wrong, so we will go ahead and send the search request. In the true positive case, the actual cost is high, but that cost was necessary, so the opportunity cost is low. In the false positive case, the actual cost is high when the search was not necessary, so the opportunity cost is also high. If we can make false positives rare, then on average, we'll be sending requests mostly when necessary, and not sending requests for most negative answers, which is good!

How does a bloom filter achieve this? Why, hashing of course! Since our database is very large, we don't want our backing table to actually store 𝑛 items for our bloom filter. Instead, we'll use a bit array of length 𝑚, where 𝑚 can be set independently of the number of items, and the array starts with all entries as 0. Additionally, rather than one hash function, we'll need 𝑘 hash functions (again, 𝑘 can be set as you want). To make things more precise, these hash functions are ℎ1,…,ℎ𝑘, where ℎ𝑖:𝐷→{0,1,…,𝑚−1} for all 𝑖=1,…,𝑘, and 𝐷 is our data space.

When adding a new tag to the database, we compute the 𝑘 hash functions for the tag, then we set all of those indices to 1. For querying to see if the tag exists, we compute the 𝑘 hash functions, and we check if all of those indices are 1. If any are 0, then we know for sure the tag isn't in the database. If they're all 1, then a false positive is possible, since an index could've been flipped to 1 when other data was added. Removing from a bloom filter is possible, but it requires other modifications.
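Here is a minimal Python sketch of the add and query steps described above. It is illustrative only: the class name is hypothetical, and the 𝑘 hash functions are simulated by salting SHA-256 with the function's index, which is one common trick but not the only choice.

```python
import hashlib


class BloomFilter:
    """Minimal Bloom filter sketch: an m-bit array plus k hash functions."""

    def __init__(self, m, k):
        self.m = m
        self.k = k
        self.bits = [0] * m  # every bit starts at 0

    def _indices(self, item):
        # Assumption: h_i is simulated by hashing "i:item" with SHA-256, mod m.
        for i in range(1, self.k + 1):
            digest = hashlib.sha256(f"{i}:{item}".encode()).digest()
            yield int.from_bytes(digest, "big") % self.m

    def add(self, item):
        for index in self._indices(item):
            self.bits[index] = 1

    def might_contain(self, item):
        # False means "definitely not added"; True means "possibly added".
        return all(self.bits[index] == 1 for index in self._indices(item))


tags = BloomFilter(m=1024, k=3)
for tag in ["hashing", "avl", "skiplist"]:
    tags.add(tag)
print(tags.might_contain("avl"))  # True: added items are never reported missing
```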

We won't go into performance here, but with the proper setting of 𝑚 and 𝑘, along with well-chosen hash functions, bloom filters do a very good job with less than a 1% false positive error rate. Hopefully, this demonstrates to you just how powerful and flexible an idea hashing is!

CS1332 - Secure Computing Considerations

Deletion versus Purging & New Memory Acquisition

Associated with Module 1: Arrays and ArrayLists

The most basic operations for many of our data structures involve adding elements, accessing those elements, and (eventually) deleting those elements. Many of our students don't realize that complex systems often "delete" data elements simply by removing the user's ability to access those items through the normal interface, while the actual data still resides in memory. Since the actual data might be sensitive, it's important to understand the difference between deletion (i.e., simply removing access) and purging (i.e., deliberately overwriting and/or modifying the sensitive data so that it is effectively unreadable if accessed later by unauthorized parties). This is demonstrated in many of our homework assignments, where we require students to overwrite the to-be-deleted values before modifying the access structures themselves. We could further expand on this by having students measure the increased number of steps needed to effect purging versus deletion, and discuss how this impacts the overall efficiency of the data structure operations.
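As a minimal illustration of deletion versus purging, here is a hypothetical array-backed list whose removal overwrites the vacated slot. Python manages memory for us, so clearing the slot only drops the backing array's reference to the value; it does not scrub the underlying memory. The point is the extra step itself.

```python
class PurgingArrayList:
    """Hypothetical array-backed list whose remove overwrites the vacated slot."""

    def __init__(self, capacity=4):
        self.backing = [None] * capacity
        self.size = 0

    def add_to_back(self, value):
        if self.size == len(self.backing):
            self.backing.extend([None] * len(self.backing))  # double capacity
        self.backing[self.size] = value
        self.size += 1

    def remove_at(self, index):
        if not 0 <= index < self.size:
            raise IndexError(index)
        removed = self.backing[index]
        # Shift later elements left to keep the data zero-aligned.
        for i in range(index, self.size - 1):
            self.backing[i] = self.backing[i + 1]
        # Purge: overwrite the now-unused last slot. Without this line, the
        # removal is only "deletion": size shrinks, but a copy stays behind.
        self.backing[self.size - 1] = None
        self.size -= 1
        return removed


names = PurgingArrayList()
for value in ["a", "b", "secret"]:
    names.add_to_back(value)
names.remove_at(2)
print(names.size, names.backing)  # 2 ['a', 'b', None, None]
```

Without the purge line, `backing` would still read `['a', 'b', 'secret', None]` after the removal, even though `size` says the list only holds two elements.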

This can also be tied into the acquisition of new memory. In many cases, a request is made to the operating system when new memory is needed. One example is Java, where the new operator returns a reference to a block of memory of the requested size, but only if such a portion of memory is free (otherwise, an OutOfMemoryError is thrown). From a security and functionality perspective, it's important to ensure that a new block has actually been assigned before attempting to dereference the result. Also, the JVM purges any potentially sensitive data from the memory it hands out by initializing every field of a new object to its default value (zero, false, or null).

Pointers and References

Associated with Module 2: Pointers, References & Singly Linked Lists

We make a general distinction between pointers and references. Pointers refer directly to locations in memory. In languages (e.g., C) that allow use of "generic pointers", the flexibility of being able to dereference arbitrary memory locations also allows potential misuse by programs and processes that (attempt to) access memory locations for which they are not authorized. Some languages (e.g., Java) don't support this "generic pointer" approach, and instead provide references. With references, only three general types of operations are allowed: (1) an object reference can be assigned "null", which effectively means that there is not any reference being made to a valid object; (2) an object reference can be assigned a new() object, where the runtime creates the new instance of the object in memory (barring any exceptions or other anomalous conditions); or (3) an object reference can be assigned another existing object reference (normally of the same type), such that the object will then have two or more references (i.e., "aliases"). The intent of references is to avoid some of the security- and memory management-related problems of allowing generic pointers.

Hashing

Associated with Module 6: Introduction to HashMaps and External Chaining (Closed Addressing)

We use the term "hashing" to define the general process of using some set of mathematical transformations (e.g., formulas) to convert the value of an element (i.e., "key") into the address where that key should be stored for later access/lookup. We will discuss how the term "hashing" is also used to refer to a different set of transformations for a given element. More specifically, users are often advised to "check the hash" of a file that they've downloaded to ensure that file's authenticity. In these cases, a hash function is used to generate a "signature" for the given element, which is often a file, and where the file is often significantly larger in size than a key. Also, the desired properties of the "security-based" hash result are different. For the "addressing-based" hash formula, the main concern is generating as uniformly distributed a set of addresses as possible, so that elements are spread evenly across the address space, minimizing collision effects as well as the average access/lookup times. For the "security-based" hash formula, the main concern is that the hash result should be extremely sensitive to any changes in the file, and yield a (clearly and distinctly) different result if the file's contents have been modified. Given that many Computer Science terms are "overloaded" in practice, it would be worthwhile for students to recognize this distinction in usage.
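The two meanings of "hashing" can be contrasted in a few lines of Python. `hashlib.sha256` is a real standard library function; the function names `table_index` and `file_fingerprint` are hypothetical.

```python
import hashlib


def table_index(key, length):
    """Addressing-style hash: map a key to a slot; an even spread is the goal."""
    return hash(key) % length


def file_fingerprint(data):
    """Security-style hash: a SHA-256 digest used to check a file's integrity."""
    return hashlib.sha256(data).hexdigest()


original = b"CS1332 lecture notes"
tampered = b"CS1332 lecture notez"  # a single byte changed

# Any one-byte change yields a completely different digest.
print(file_fingerprint(original))
print(file_fingerprint(tampered))

# Small ints hash to themselves in CPython, so these keys land in consecutive slots.
print([table_index(k, 13) for k in range(5)])  # [0, 1, 2, 3, 4]
```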

Randomness

Associated with Module 7: SkipLists & Probabilistic Data Structures

The algorithms that we study are mainly deterministic: each step of the algorithm leads to a single, distinct set of changes to the computation state. We will discuss how the properties of randomness can be leveraged in the implementation of various data structures (e.g., SkipLists). From a performance standpoint, randomness can be used to ensure that the elements being stored are distributed across the data structure to support a relatively fast, expected O(log n) access/lookup time.
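A minimal sketch of how randomness decides a SkipList node's level, assuming fair coin flips and a hypothetical cap `max_level`. Because the level depends only on the coin flips, whoever chooses the insertion order has no control over it.

```python
import random


def random_level(rng, max_level):
    """Flip a fair coin; each heads promotes the new node one more level.

    Level 0 is the bottom list that holds every element; max_level is a
    hypothetical cap that keeps the height bounded.
    """
    level = 0
    while level < max_level and rng.random() < 0.5:
        level += 1
    return level


rng = random.Random(1332)  # seeded only so reruns are reproducible
levels = [random_level(rng, 16) for _ in range(10_000)]
# Roughly half the nodes stay at level 0, a quarter stop at level 1, and so on.
for lvl in range(4):
    print(lvl, levels.count(lvl))
```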

And from a security standpoint, the use of randomness makes it significantly more difficult for an adversary to insert elements into the data structure in a way that will deliberately degrade the performance. For example, inserting elements into a Binary Search Tree (BST) structure in sequential (or reverse sequential) order will cause the access/lookup performance of the BST to degrade towards that of a list-like structure, from O(log n) to O(n). Similar "attacks" against a SkipList, however, would be unsuccessful, since each node's level comes from random coin flips rather than from the order in which elements are inserted.
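The sorted-order "attack" on a plain BST is easy to reproduce. This sketch uses the same height convention as the AVL module (a leaf has height 0, an empty tree has height -1); the class and function names are hypothetical.

```python
class Node:
    def __init__(self, data):
        self.data = data
        self.left = None
        self.right = None


def bst_add(node, data):
    """Plain (unbalanced) BST add using pointer reinforcement."""
    if node is None:
        return Node(data)
    if data < node.data:
        node.left = bst_add(node.left, data)
    elif data > node.data:
        node.right = bst_add(node.right, data)
    return node


def height(node):
    """Height with the convention that a leaf is 0 and an empty tree is -1."""
    if node is None:
        return -1
    return 1 + max(height(node.left), height(node.right))


root = None
for value in range(1, 101):  # adversarial input: already sorted
    root = bst_add(root, value)
print(height(root))  # 99: every node hangs off the right, like a linked list
```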

M8 - AVLs

Module Overview

The biggest drawback that we encountered for BST operations was that the height of a BST can degenerate to 𝑂(𝑛) due to imbalance in the tree. We left the idea of a "balanced tree" intentionally vague back then because there are different ways to define balancing.

In this module, we'll be covering a subclassification of BSTs called AVL trees, which are self-balancing trees. The name of the tree comes from its creators, Adelson-Velsky and Landis. AVLs formally define what a "balanced tree" is, and they impose the additional restriction that by the end of any operation, the tree must remain balanced. For us, we'll be looking at how this applies to the add and remove operations specifically, and how the pointer reinforcement technique helps us implement the needed mechanisms (known as rotations) in a clean and intuitive way.

Learning Objectives

Students will:

- Refresh their knowledge of BST operations as well as pointer reinforcement, a technique to implement simple modifier operations cleanly.

- Gain familiarity with how AVL trees formally define their balancing scheme along with what a balanced tree is/is not under this definition.

- Learn about the various rotation types of an AVL, and how these rotations fit into the general add/remove operations of a BST.

- Build their maturity with recursive concepts by implementing rotations using pointer reinforcement.

Intro to AVLs

Now, we can begin to explore AVL trees by looking at how we define the structure of an AVL. Since AVLs are a subclassification of BSTs, they share the same order and shape properties. However, AVLs have an additional shape property to restrict the height of the tree. Let's take a look at how AVLs define balancing through the concepts of heights and balance factors.

Tips:

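- To compute a node's balance factor, you only need its children's heights, never their balance factors.

- The leniency (a BF of -1 or 1 still counts as balanced) is there because a perfectly balanced binary tree is rare.

- A positive BF means the node is left heavy; a negative BF means the node is right heavy.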
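A minimal sketch of how a node's height and balance factor can be computed, assuming each node stores its height and an empty subtree counts as height -1 (so a leaf has height 0). Only the children's heights are read. The names are hypothetical, not the course's starter code.

```python
class AVLNode:
    def __init__(self, data, left=None, right=None):
        self.data = data
        self.left = left
        self.right = right
        self.height = 0
        self.balance_factor = 0


def node_height(node):
    # An empty subtree has height -1, so a leaf ends up with height 0.
    return -1 if node is None else node.height


def update(node):
    """Recompute node's height and BF from its children's stored heights."""
    left_h = node_height(node.left)
    right_h = node_height(node.right)
    node.height = 1 + max(left_h, right_h)
    node.balance_factor = left_h - right_h  # positive: left heavy; negative: right heavy


leaf = AVLNode(10)
update(leaf)
parent = AVLNode(20, left=leaf)
update(parent)
grandparent = AVLNode(30, left=parent)
update(grandparent)
print(grandparent.height, grandparent.balance_factor)  # 2 2 (left heavy, unbalanced)
```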

The Definition of Balanced for AVL Trees

In the video, we saw that balanced trees for an AVL satisfy the following definition:

|node.balanceFactor|≤1 for every node. We also saw a brief justification as to why we chose 1 rather than 0. There are many configurations where it's just not possible to have a perfectly balanced tree. For example,

As we see in the diagram above, we have a perfectly balanced tree with 𝑛 = 3 (it's so beautiful!). However, this configuration can only happen when 𝑛 is of the form 𝑛 = 2^𝑘 − 1. Adding just one more data item leads to an unbalanced tree in some way. Thus, we must accept some imbalance in the world of AVLs. A natural first goal would be to minimize the sum of the depths of all nodes, which in turn minimizes the imbalance encountered in the tree.

In the diagram below, we add 2 to the original tree. The tree on the left is the result of a regular BST add. If we instead rebalance to minimize the sum of the node depths, we get the tree on the right.

There you go! Perfectly balanced, as all things should be. But wait. At what cost? We got a perfectly balanced tree by minimizing the sum of the node depths, but look at what happened. All the data noted in red are data that had to move to maintain the order property of a BST. The rebalancing turned a single add into an 𝑂(𝑛) operation just to update the tree, which is exactly what we were trying to avoid in the first place.

So, maybe minimizing the imbalance isn't the best thing to do. If we tolerate a little more imbalance, then we approach the definition of balanced for an AVL. AVLs allow the heights of the two subtrees of every node to differ by at most 1 (which is exactly what the balance factor definition says). If we "lazily update" by adding to the natural spot in a BST, then we can save significant cost because updates will not occur as often. When an update does occur, it will be very efficient, as shown in the next lesson. AVL trees hit the sweet spot between allowing some imbalance and keeping operations efficient. It can in fact be shown that the height of an AVL tree is at most about 1.44 log(𝑛), which is a pretty tight tree!
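Where does the 1.44 log(𝑛) bound come from? A sketch of the standard argument: the sparsest AVL tree of height ℎ has a root whose subtrees are themselves as sparse as possible, with heights ℎ−1 and ℎ−2, so its node count satisfies N(ℎ) = N(ℎ−1) + N(ℎ−2) + 1, a Fibonacci-like recurrence. The Python below computes it, using the convention that a leaf has height 0; the function name is hypothetical.

```python
import math


def min_avl_nodes(h):
    """Fewest nodes possible in an AVL tree of height h (a leaf has height 0)."""
    if h == 0:
        return 1
    prev, curr = 1, 2  # N(0) and N(1)
    for _ in range(h - 1):
        prev, curr = curr, prev + curr + 1  # N(h) = N(h-1) + N(h-2) + 1
    return curr


print([min_avl_nodes(h) for h in range(6)])  # [1, 2, 4, 7, 12, 20]
# Height relative to log2(n) for the sparsest tree of height 40:
print(40 / math.log2(min_avl_nodes(40)))  # about 1.39
```

Since N(ℎ) grows exponentially (like the Fibonacci numbers), ℎ is logarithmic in 𝑛; the ratio ℎ / log2(N(ℎ)) creeps up toward about 1.44 as ℎ grows.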

AVL Single Rotations

Properties

- Left rotation: Node BF is -2, and right child has a balance factor of 0 or -1.

- Result: the top node becomes the left child of the middle node.

- Right rotation: Node BF is 2, and left child has balance factor of 0 or +1

- Result: the top node becomes the right child of the middle node.

- You must always update the height & BF of the former parent (e.g. node A) before doing the same for the new parent.
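A minimal Python sketch of the two single rotations with pointer reinforcement: each rotation returns the new root of the subtree so the caller can reattach it. The names are hypothetical; the update order follows the property above (the former parent first, then the new parent).

```python
class AVLNode:
    def __init__(self, data, left=None, right=None):
        self.data = data
        self.left = left
        self.right = right
        self.height = 0
        self.balance_factor = 0


def update(node):
    """Recompute height and BF from the children's stored heights (empty = -1)."""
    left_h = node.left.height if node.left else -1
    right_h = node.right.height if node.right else -1
    node.height = 1 + max(left_h, right_h)
    node.balance_factor = left_h - right_h


def rotate_left(a):
    """Left rotation on a right-heavy node a; returns the new subtree root."""
    b = a.right
    a.right = b.left  # b's left subtree moves over to a
    b.left = a        # the top node becomes the middle node's left child
    update(a)         # a is now below b, so update it first
    update(b)
    return b


def rotate_right(c):
    """Right rotation on a left-heavy node c; returns the new subtree root."""
    b = c.left
    c.left = b.right  # b's right subtree moves over to c
    b.right = c       # the top node becomes the middle node's right child
    update(c)
    update(b)
    return b


n3 = AVLNode(3)
update(n3)
n2 = AVLNode(2, right=n3)
update(n2)
n1 = AVLNode(1, right=n2)
update(n1)  # BF -2, right child BF -1: needs a left rotation
root = rotate_left(n1)
print(root.data, root.left.data, root.right.data, root.height)  # 2 1 3 1
```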

Rebalancing after removing a node

- Use standard BST method to remove a node and repair links

- Update BF of its parent.

- Determine whether the BF is imbalanced (bf > 1 or bf < -1) and the node needs a left/right rotation.

- bf < -1 = left rotation

- bf > 1 = right rotation

- Apply rotation.

- Set parent node’s left/right pointer to child node’s left/right child (depending on rotation method)

- Set new parent node’s left/right pointer to former parent node

- Update height and BF of affected nodes.

- Keep in mind that in the two-child case, you may need to update and possibly rebalance nodes on the way back up from the predecessor or successor node. Doing this keeps the tree balanced as we bubble back up.

Step 2 - BF of parent node is < -1. Apply left rotation.

Step 3 - Link parent node 2 to the left child (node 3) of child node 4.

Step 4 - Set child node 4's left child to be its former parent (node 2). Update heights and BFs.

AVL Double Rotations

Properties

- Left-right Rotation: Node BF is 2 and left child’s BF is -1

- Result: top node becomes the right child of the bottom node, and the middle node becomes the left child of the bottom node.

- Right-left Rotation: Node BF is -2 and right child has balance factor of 1.

- Result: top node becomes the left child of the bottom node, and the middle node becomes the right child of the bottom node.

- If a rotation is done, the heights and BF of involved nodes are updated again.

- Right-left rotation: right rotation on the child, then left rotation on the parent node.

- Left-right rotation: left rotation on the child, then right rotation on the parent node.

- After an add, a rotation restores the unbalanced subtree to its original height (its height before the add).

- Single rotation: O(1), since they’re only concerned with updating 3 nodes at most (unbalanced node, its child, and grandchild)

- Double rotation: O(1), since they’re comprised of 2 single O(1) rotations
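A double rotation is just a rotation on the child followed by a rotation on the parent. This self-contained sketch (hypothetical names) repeats the single rotations and adds a `balance` helper that picks among all four cases by checking the node's BF first and then its child's BF.

```python
class AVLNode:
    def __init__(self, data, left=None, right=None):
        self.data = data
        self.left = left
        self.right = right
        self.height = 0
        self.balance_factor = 0


def update(node):
    left_h = node.left.height if node.left else -1
    right_h = node.right.height if node.right else -1
    node.height = 1 + max(left_h, right_h)
    node.balance_factor = left_h - right_h


def rotate_left(a):
    b = a.right
    a.right = b.left
    b.left = a
    update(a)
    update(b)
    return b


def rotate_right(c):
    b = c.left
    c.left = b.right
    b.right = c
    update(c)
    update(b)
    return b


def balance(node):
    """Update node, apply whichever rotation its BF calls for, return the new root."""
    update(node)
    if node.balance_factor < -1:                   # right heavy
        if node.right.balance_factor > 0:          # right child leans left
            node.right = rotate_right(node.right)  # right-left: rotate child first
        return rotate_left(node)
    if node.balance_factor > 1:                    # left heavy
        if node.left.balance_factor < 0:           # left child leans right
            node.left = rotate_left(node.left)     # left-right: rotate child first
        return rotate_right(node)
    return node


# Right-left case: 10 -> right 30 -> left 20.
n20 = AVLNode(20)
update(n20)
n30 = AVLNode(30, left=n20)
update(n30)
n10 = AVLNode(10, right=n30)
root = balance(n10)
print(root.data, root.left.data, root.right.data)  # 20 10 30
```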

Example: add(54)

1) Add 54 to BST

2) Update imbalance

- Leaf nodes will have BF of 0

- Increase height of parent node by +1 and BF by +1 (if only one child exists)

We observe that node 50 is unbalanced.

4) Apply a double rotation if the unbalanced node's BF is 2 or -2 and its child leans the opposite way.

Node 50's BF is -2 and its right child (68) leans left, so this is the right-left case.

Right rotate on 68 (the child). Node 62 moves up, and 68 becomes its right child.

Update BF + height.

Left rotate on 50 (the parent). Node 62 moves up again, and 50 becomes its left child.

Update BF + height. Return node 62 as node 44's right child, then update node 44's BF + height.

Rotation Table

In the past, students have told us that the table presented in the video for rotation types is very useful as a study tool. We provide it here so that you can reference it as needed. Do keep in mind that the shape shown is the simplest case; there will likely be children nodes or entire subtrees in an actual implementation that need to be properly managed.

Qs: What needs to be changed with a BST to become an AVL tree?

- Where does the update code go in the add and remove methods?

- After the add / remove action is made, after the recursive call.

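- What is returned when pointer reinforcement is used? What is returned from each rotation?

- The root of the (possibly rotated) subtree; each rotation returns the new root of the rotated subtree.

- How is the balancing information updated? What differences are there in terms of the heights compared to BSTs?

- Each node's height and BF are updated on the way back up the recursion, and the affected nodes are updated again after each rotation. Unlike in a BST, each node stores its height, so it can be read in 𝑂(1) instead of being recomputed.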
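The answers above can be seen in a compact, hypothetical Python sketch of an AVL tree with pointer reinforcement: `add` and `remove` recurse down, then call `_balance` after the recursive call, and every function returns the root of its (possibly rotated) subtree. The two-child removal case uses the successor and rebalances on the way back up from it. This is a study sketch, not the course's reference implementation.

```python
class AVLNode:
    def __init__(self, data):
        self.data = data
        self.left = None
        self.right = None
        self.height = 0          # a leaf has height 0
        self.balance_factor = 0  # height(left) - height(right)


def _height(node):
    return -1 if node is None else node.height


def _update(node):
    node.height = 1 + max(_height(node.left), _height(node.right))
    node.balance_factor = _height(node.left) - _height(node.right)


def _rotate_left(a):
    b = a.right
    a.right = b.left
    b.left = a
    _update(a)  # former parent first, then the new parent
    _update(b)
    return b


def _rotate_right(c):
    b = c.left
    c.left = b.right
    b.right = c
    _update(c)
    _update(b)
    return b


def _balance(node):
    """Update node, rotate if needed, and return the subtree's new root."""
    _update(node)
    if node.balance_factor < -1:
        if node.right.balance_factor > 0:  # right-left case
            node.right = _rotate_right(node.right)
        return _rotate_left(node)
    if node.balance_factor > 1:
        if node.left.balance_factor < 0:  # left-right case
            node.left = _rotate_left(node.left)
        return _rotate_right(node)
    return node


def add(node, data):
    """Add data below node; return the root of the updated subtree."""
    if node is None:
        return AVLNode(data)
    if data < node.data:
        node.left = add(node.left, data)
    elif data > node.data:
        node.right = add(node.right, data)
    else:
        return node  # duplicate: nothing changed
    return _balance(node)  # update code runs after the recursive call


def remove(node, data):
    """Remove data below node; return the root of the updated subtree."""
    if node is None:
        raise KeyError(data)
    if data < node.data:
        node.left = remove(node.left, data)
    elif data > node.data:
        node.right = remove(node.right, data)
    elif node.left is None:
        return node.right  # zero or one child: splice the node out
    elif node.right is None:
        return node.left
    else:  # two children: take the successor's data
        node.data, node.right = _remove_min(node.right)
    return _balance(node)


def _remove_min(node):
    """Remove the smallest node; return (its data, new subtree root)."""
    if node.left is None:
        return node.data, node.right
    smallest, node.left = _remove_min(node.left)
    return smallest, _balance(node)  # rebalance on the way back up


def inorder(node):
    return [] if node is None else inorder(node.left) + [node.data] + inorder(node.right)


root = None
for value in range(1, 8):  # sorted input would turn a plain BST into a chain
    root = add(root, value)
print(root.data, root.height)  # 4 2
root = remove(root, 4)  # two-child case: successor 5 takes its place
print(root.data, inorder(root))  # 5 [1, 2, 3, 5, 6, 7]
```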

Logistics of an AVL

There are some final logistics behind the functionality of an AVL tree based on the rotations that need to be covered.

The Number of Rotations per Add/Remove

Let's get a better handle on how these rotations and updates occur in an AVL tree. Based on the algorithm, we recurse down the tree to find the location to add/remove. Once we've done the standard BST add/remove operation, on the way back up the tree (unwinding the recursion), we update the ancestor nodes. The height and balance factor are updated for every node on the return path, but a rotation won't be needed at every node. Each rotation is 𝑂(1), and so is every update, but it's worth seeing how often these rotations actually occur. We know that the number of rotations can be at most 𝑂(log(𝑛)) since the height is 𝑂(log(𝑛)), but perhaps the number of rotations is far less than this.

That is precisely what ends up happening with the add operation. As it turns out, each add operation triggers at most one rotation (counting a double rotation as one rebalancing step). This is because when adding data, any rotation restores the unbalanced subtree to its original height. On the other hand, a remove operation can trigger 𝑂(log(𝑛)) rotations in the worst case; remove operations do not have the same nice property. When a rotation is performed due to a removal, the unbalanced subtree may not regain its original height, propagating height changes up the tree.

Let's try out an example with the add method. The steps involved here will be detail oriented, so take things one step at a time and make sure you understand each step of the reasoning before continuing to read! Consider the diagram below, where we are seeing the first rotation triggered by an add operation on the way up the tree. We can do similar reasoning for the other rotation types, so let's keep it simple and assume it was a right rotation. We will derive that each of these heights and balance factors must be as shown, assuming only that the height of node 𝐶 is ℎ+1 and that a right rotation is necessary (so 𝐶's balance factor is 2).

We immediately know that the height of node 𝐵 is ℎ and the height of 𝑇4 is ℎ−2 due to the balance factor and height of 𝐶. Additionally, this imbalance was caused by the data added. If any subtree's height changed, it was because the height increased. This also means that the new data must be in the subtree of 𝐵 rather than 𝑇4. For a right rotation, the balance factor of 𝐵 can be 0 or 1, but we've written in the image that it must be 1. Why is this? Well, we only added a single data item. This means that only the height of 𝐴 or the height of 𝑇3 could have increased, not both, so the balance factor of 𝐵 cannot be 0; since we're in the right rotation case, it must be 1. This implies that the new data must be in the subtree of 𝐴 (if it was in the subtree of 𝑇3, then the tree would've been unbalanced before the add), giving heights of ℎ−1 for 𝐴 and ℎ−2 for 𝑇3.

After the rotation, 𝑇1,𝑇2,𝑇3, and 𝑇4 are completely unmodified, and, thus, their heights are unmodified. The heights of 𝑇3,𝑇4 determine the height and balance factor of node 𝐶, which is now ℎ−1 and 0 respectively. The height of 𝐴 is also unchanged. This allows us to compute the height and balance factors of the new root 𝐵, which are ℎ and 0 respectively.

This is exactly what we were looking for because the height of this unbalanced subtree was ℎ+1, and before we added the new data, the height was one less, which is ℎ. We've restored the original height, so the rest of the nodes up the tree will not change heights and balance factors!

The Efficiency of an AVL Tree

AVL trees guarantee that the height of the tree is 𝑂(log(𝑛)), so operations like search, add, and remove now have a worst case of 𝑂(log(𝑛)), since the rebalancing is all done in 𝑂(log(𝑛)) time along the same path taken to reach the location. By contrast, those same operations have a worst case of 𝑂(𝑛) in a normal BST. Additionally, since the height is stored in each AVL node to maintain balancing information, computing the height of an AVL tree is now 𝑂(1) as opposed to 𝑂(𝑛) before. This all seems like a straight improvement over a BST, but there are some caveats and asterisks here.

We need to keep in mind that when analyzing the efficiency of some tree operation, we should be cognizant of how it might differ between an AVL and a BST, in both efficiency and correctness. For example, traversals would be 𝑂(𝑛) for both since the balancing structure doesn't change the algorithm or its efficiency. However, if an operation would modify the tree structure in some way, then it may be harder to do in an AVL since we must maintain proper balancing by the end of the operation. For example, removing all leaves in a BST can be done reasonably easily in 𝑂(𝑛) time using a single traversal. For an AVL, this may not be so obvious anymore since removing nodes may trigger rotations, which will change the leaves of the tree midway. In other words, correctness may be harder to achieve due to rebalancing, which may worsen the time complexity.
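For the BST case, the single-traversal approach might look like this minimal sketch (hypothetical names). Leaf status is checked before recursing, so only the original leaves are removed, even if their parents become leaves afterward.

```python
class Node:
    def __init__(self, data, left=None, right=None):
        self.data = data
        self.left = left
        self.right = right


def remove_leaves(node):
    """Remove every leaf of a plain BST in one O(n) pass.

    Pointer reinforcement: each call returns what should replace node.
    """
    if node is None:
        return None
    if node.left is None and node.right is None:
        return None  # this node is a leaf, so drop it
    node.left = remove_leaves(node.left)
    node.right = remove_leaves(node.right)
    return node


root = Node(4, Node(2, Node(1), Node(3)), Node(6, Node(5)))
root = remove_leaves(root)
print(root.data, root.left.data, root.right.data)  # 4 2 6
```

In an AVL, each removal would also trigger rebalancing, and rotations could reshape the tree partway through this traversal, which is exactly the complication described above.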

If the main things we're doing are adding, searching, removing, and traversals though, then we're golden!

Food for Thought - Self-Balancing BSTs

Self-Balancing BSTs: There's Two!?

When you look up self-balancing BSTs, you will most likely see two types of BSTs come up: AVL trees and Red Black trees. This course does not cover Red Black trees (perhaps in the future if there is sufficient demand, but there are currently no plans for it), primarily for two reasons:

- We see two types of trees in this course with complex operations and balancing schemes (AVLs and (2, 4) trees that we'll see next module). If you can make it through both of these types of trees and understand their operations, then you shouldn't have much trouble learning Red Black trees. Many of the concepts such as rotations will carry over, so we leave it to you if you are interested.

- We want to keep the material paced well for learning while preventing the course length from exploding too much. If we wanted to also cover Red Black trees, we'd probably want to break these three tree types up to prevent the course from becoming too monotonous, and that'd probably extend the data structures part of the course by another 2-3 modules. If you make it through all of these modules, you've likely learned enough to go off on your own and learn other interesting data structures!

We mentioned earlier that AVL trees can be shown to have a worst case height of around 1.44 log(𝑛). You may wonder how Red Black trees fare against this. Red Black trees can be shown to have a worst case height of around 2 log(𝑛). In other words, the balancing of a Red Black tree is looser than that of an AVL tree. Because of this, if you know you'll mostly be performing lookups, you'd prefer the AVL tree of the two.

AVL trees usually store balancing information such as heights or balance factors, whereas Red Black trees store only one bit per node: its "color," red or black. This may give Red Black trees the edge in memory, but AVL trees can be optimized to store just the balance factor, which needs only 2 bits since it is always -1, 0, or 1. Red Black trees tend to be better as general-purpose structures, which is why they are the more commonly implemented of the two, but for most cases the performance difference between them is not that significant.

A performance analysis by Ben Pfaff back in 2003 studied 4 different BST variants in a variety of settings, so you might find that interesting. It's an experimental paper, so it's fairly readable, and it's interesting to see in what contexts each BST type excels. We'll cite it in the module review.

You may have noted that we said 4 BST variants. These are the regular BST, AVL, Red Black, and Splay trees. We'll also briefly discuss splay trees in the next module, which are one of the more interesting BST variants because they yield efficient operations without maintaining strict balancing!

M99 - SkipLists

Errata: Slide should say, “If data > right, then continue moving right” and “If data < right, move down a level”
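The corrected search rule, together with an add that promotes new data by coin flips, can be sketched in Java as follows. This is a minimal sketch, not the course's reference implementation: it assumes distinct `int` data, a fair coin from `java.util.Random` (seeded so runs are reproducible), and a head sentinel that acts as negative infinity. `SkipListSketch`, `contains`, and `add` are hypothetical names, and removal is omitted.

```java
import java.util.Random;

// Hypothetical, minimal SkipList of distinct ints (search and add only).
public class SkipListSketch {
    // One node per (data, level); 'down' links to the same data one level lower.
    static final class Node {
        final Integer data; // null marks a head sentinel (acts as negative infinity)
        Node next;
        Node down;

        Node(Integer data, Node next, Node down) {
            this.data = data;
            this.next = next;
            this.down = down;
        }
    }

    private Node head = new Node(null, null, null); // top-left sentinel; starts at level 0
    private int topLevel = 0;                       // level index of the head
    private final Random coin;

    public SkipListSketch(long seed) {
        coin = new Random(seed);
    }

    // Corrected slide rule: if data > right, keep moving right;
    // if data < right (or there is no right), move down a level.
    public boolean contains(int data) {
        for (Node curr = head; curr != null; curr = curr.down) {
            while (curr.next != null && data > curr.next.data) {
                curr = curr.next;
            }
            if (curr.next != null && data == curr.next.data) {
                return true;
            }
        }
        return false;
    }

    public void add(int data) {
        if (contains(data)) {
            return; // this sketch ignores duplicates
        }
        // Flip a fair coin: each heads promotes the new data one more level.
        int levels = 0;
        while (coin.nextBoolean()) {
            levels++;
        }
        // Grow the head's tower until the new data's top level exists.
        while (topLevel < levels) {
            head = new Node(null, null, head);
            topLevel++;
        }
        // Descend from the top, splicing the new data into every level <= levels.
        Node curr = head;
        Node above = null;
        for (int level = topLevel; level >= 0; level--) {
            while (curr.next != null && data > curr.next.data) {
                curr = curr.next;
            }
            if (level <= levels) {
                Node created = new Node(data, curr.next, null);
                curr.next = created;
                if (above != null) {
                    above.down = created; // connect the tower vertically
                }
                above = created;
            }
            curr = curr.down;
        }
    }
}
```

For example, adding 20 and 45 and then 25 places 25 between 20 and 45 at the bottom level, regardless of how the coin flips promote it.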

- Add 25 between 20 and 45 at bottom level

- Once found, LinkedList removal removes 45 from all levels.

- Search/Add/Remove: Worst case O(n) occurs when all nodes live on level 0; the SkipList essentially becomes a LinkedList.

- Space: Worst case O(n log n) when all nodes are promoted to the top level, resulting in a massive grid.

- Space: Average case O(n), because the expected number of nodes converges to a geometric series.

- In the case of adding, if nothing has been promoted, the head is at level 0, and we add a new minimum data.
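The average-case space bound follows from a short geometric-series argument. Assuming each promotion succeeds independently with probability 1/2 (a fair coin flip), each data has a copy at level 𝑖 with probability (1/2)ⁱ, so by linearity of expectation the expected number of nodes across all levels is:

```latex
\mathbb{E}[\text{nodes}] = \sum_{i=0}^{\infty} n \left(\frac{1}{2}\right)^{i} = n \cdot \frac{1}{1 - \frac{1}{2}} = 2n = O(n)
```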

The Purpose of a SkipList

If you are like most students, you may come out of this lesson thinking "Cool, but what is the point of SkipLists, and why are they placed here categorically?" This line of thinking is certainly valid and one we hope that you take as you try to piece together the big picture of this course. To answer these questions, we give a few different answers, which we hope will stimulate some interesting thought and discussion.

- SkipLists can be useful for simple concurrent access operations: Concurrent access is the scenario where two users/machines/agents work with the same data structure simultaneously. In a BST, imagine one user calling a remove operation while another user searches for some data. If the remove operation begins and turns out to be a two-child case, the search may run while the pointers are still being relinked. The search may then yield the wrong result because it looked at the tree before the tree was ready for searching.

SkipLists work decently well with this problem because as long as we move pointers in the correct order, even if we are in the middle of updating the SkipList, the end result of a search will be correct since we won't skip data by accident. However, we should note that for many reasons, SkipLists are not often the tool of choice when it comes to concurrent access operations; we just think it's a cool way to introduce the idea of concurrent access if you've never seen it before.

- SkipLists are simple to implement: We will see later on in the course multiple variations of BSTs that have some kind of balancing scheme in order to help search times. However, these balancing schemes can be tricky to implement, whereas SkipLists are relatively simple to implement and understand. A SkipList still has a worst-case behavior similar to that of an unbalanced BST, but its reliance on probability often allows it to perform comparably to these balancing schemes.

- SkipLists are a nice introduction to randomization in computing: This is probably the truest answer for why these are taught in many introductory courses and placed in a similar manner. The fact is that SkipLists are one of the more niche data structures taught in this course. This is in contrast to the rest of the course, which takes a generalist approach, allowing you to build up your toolbox of sledgehammers that you can bring out in a variety of situations and contexts.

Randomized computing is an extremely interesting area that is actively being researched and used today, but as you may expect, much of it is locked behind a decently hefty mathematical background and maturity. So, although we won't get to see much randomization in this course, SkipLists are an example of a randomization concept that is simple enough to see in an introductory course like this.

Food for Thought - Randomness In Computing

As we mentioned in the previous SkipList unit, we won't be able to spend much time on randomized computation due to insufficient mathematical background. We would like to take the time to question the use of randomness in computing at all. Randomness is a concept used to model real-world phenomena such as coin tosses or dice rolls. It makes sense to us that if we flip a coin that is equally weighted, we expect about half of the flips to be heads, which we call a "probability of 1/2."

But does it make sense to assume that computers should have access to randomness? To put it another way, is it fair to assume that computers can model random behavior like flipping a coin? The reason we're doubting this is because computers operate in a deterministic way, meaning that the code they execute will always proceed the same way (no randomness). So, can computers model true randomness?

Another related question is whether or not randomness "helps" computation. That is, if we assume that we have access to randomness, can we come up with solutions to problems that are "better" than purely deterministic ones (i.e. faster or more accurate)? We hope to address these two questions here in a limited capacity.

- Access to randomness in computing: Whether computers can generate truly random results is an open question in computer science. If you call a random number generator (RNG) in most languages, it will use what is called a pseudo-RNG (PRNG), which generates numbers in a completely deterministic way that nonetheless appears random for most applications. A PRNG usually starts from a base number called a seed, which is fed through large, complex arithmetic operations that "spread out" the numbers so they appear random. The problem with this approach is that, since it's completely deterministic, anyone who knows the seed and the algorithm used can reproduce all of the "randomness" the PRNG gives.
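Java's `java.util.Random` is one such PRNG: its documentation describes a 48-bit seed updated by a linear congruential formula, and guarantees that two instances created with the same seed and given the same sequence of calls return identical numbers. The sketch below shows that reproducibility; `SeedDemo` and `draw` are hypothetical names.

```java
import java.util.Arrays;
import java.util.Random;

public class SeedDemo {
    // Draw `count` integers in [0, bound) from a PRNG seeded with `seed`.
    static int[] draw(long seed, int count, int bound) {
        Random rng = new Random(seed);
        int[] out = new int[count];
        for (int i = 0; i < count; i++) {
            out[i] = rng.nextInt(bound);
        }
        return out;
    }

    public static void main(String[] args) {
        int[] first = draw(2024L, 5, 100);
        int[] second = draw(2024L, 5, 100);
        // Same seed and same algorithm: the "random" sequences are identical.
        System.out.println(Arrays.toString(first));
        System.out.println(Arrays.equals(first, second)); // prints true
    }
}
```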

Some RNGs make use of real-world phenomena that are very complex in nature such as atmospheric noise or thermal fluctuations in the air as a source of randomness. Whether these phenomena can truly be called random is a valid question, but they avoid the reverse engineering problem that PRNGs have.

- Usefulness of randomness in computing: This, too, is a major open question in computer science. There are many problems with nice, simple, efficient, and elegant randomized solutions that seemingly have no deterministic counterpart. For example, consider primality testing: checking whether a number is prime. Several simple and efficient randomized solutions have been known for a long time, but finding an efficient deterministic solution was a major hurdle. Such a solution was eventually found, but it's much more difficult to implement and less efficient.
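The notes don't say which randomized primality tests they have in mind; the Miller-Rabin test is one well-known example, sketched below in Java. It assumes `n` fits in a `long` and uses `BigInteger.modPow` to avoid overflow; `MillerRabin` and `isProbablePrime(long, int, Random)` are hypothetical names. A "composite" answer is always correct, while a "probably prime" answer can be wrong with probability at most (1/4)ᵏ after 𝑘 rounds.

```java
import java.math.BigInteger;
import java.util.Random;

public class MillerRabin {
    // Returns false if n is definitely composite, true if n is probably prime.
    static boolean isProbablePrime(long n, int rounds, Random rng) {
        if (n < 2) {
            return false;
        }
        // Handle small primes directly; this also rules out their multiples.
        for (long p : new long[] {2, 3, 5, 7}) {
            if (n == p) {
                return true;
            }
            if (n % p == 0) {
                return false;
            }
        }
        // Write n - 1 as d * 2^s with d odd.
        long d = n - 1;
        int s = 0;
        while (d % 2 == 0) {
            d /= 2;
            s++;
        }
        BigInteger bigN = BigInteger.valueOf(n);
        BigInteger nMinusOne = bigN.subtract(BigInteger.ONE);
        for (int round = 0; round < rounds; round++) {
            // Random base a in [2, n - 2]; n >= 11 here, so n - 3 >= 8.
            long a = Math.floorMod(rng.nextLong(), n - 3) + 2;
            BigInteger x = BigInteger.valueOf(a).modPow(BigInteger.valueOf(d), bigN);
            if (x.equals(BigInteger.ONE) || x.equals(nMinusOne)) {
                continue; // this base is consistent with n being prime
            }
            boolean composite = true;
            for (int r = 1; r < s; r++) {
                x = x.multiply(x).mod(bigN);
                if (x.equals(nMinusOne)) {
                    composite = false;
                    break;
                }
            }
            if (composite) {
                return false; // a is a witness: n is definitely composite
            }
        }
        return true;
    }
}
```

Java's standard library already ships a probabilistic test of this kind as `BigInteger.isProbablePrime(int certainty)`.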

For many of these problems, randomness appears to play a key role in solving them efficiently. Perhaps we just haven't found the right ideas, or haven't thought far enough outside the box, to come up with deterministic solutions. Nothing proves for sure whether randomness improves the power of computing, so it remains an open problem.

M9 - (2-4) Trees

In this module, we continue our exploration of trees by looking at a very different type of tree from what we've seen before: a non-binary tree type known as the (2, 4) tree. (2, 4) trees inherit an order property similar to that of BSTs, but their nodes are flexible, allowing multiple data and multiple children per node. (2, 4) trees also have a stricter shape property, requiring every leaf node to reside at the same depth. This is what makes the height of a (2, 4) tree logarithmic.

(2, 4) trees are the last type of tree we will cover in the course sequence, and they also mark the end of the data structures half of the sequence! With the completion of this module, you'll have built up a formidable toolkit of data structures that you can draw from when problem solving in your programming work. Of course, we cannot cover every data structure that might be useful. But with the knowledge and maturity you have built from this course sequence, we know you have the skills to tackle other data structures on your own as you encounter them in your work. Nevertheless, this module also features some optional "Food for Thought" glimpses at splay trees and Red Black trees, which are other types of BSTs that keep their operations efficient through various rotations.

Learning Objectives

Students will:

- Encounter their first tree type that is not binary, (2, 4) trees.

- Gain familiarity with the order, shape, and node properties of (2, 4) trees, and why they lead to logarithmic search times.

- Diagram the add operation and its procedure, along with how it fixes overflow violations via promotion/split operations.

- Understand the complex inner workings of the remove operation, along with how it fixes underflow violations via transfer and fusion operations.

Intro to 2-4 Trees

We begin this lesson by looking at the properties of (2, 4) trees (sometimes referred to as 2-3-4 trees). These trees generalize BSTs by allowing multiple data, anywhere from 1 to 3, to be stored in sorted order in a single node. (2, 4) trees are a special case of multiway search trees, which in general allow any number of data per node. They're called multiway search trees because if a node holds 𝑥₁ < 𝑥₂ < ⋯ < 𝑥ₘ, then these data define 𝑚 + 1 possible areas for other data to fall into. So, a node with 𝑚 data can have 𝑚 + 1 children, each holding data in the corresponding range (the namesake of (2, 4) trees is that every non-leaf node has between 2 and 4 children).

Multiway search trees that enforce a strict balancing scheme are known as B-trees. As we will see, (2, 4) trees are always perfectly balanced in terms of tree height, so they are also a special case of B-trees.

The strict shape property guarantees O(log(n)) operations.

Balancing in a (2, 4) Tree

In the AVL module, we saw that we cannot always maintain perfect balancing of heights in a BST due to the problem shown below. Perfect balancing can only occur for certain values of 𝑛 (namely 𝑛 = 2ᵏ - 1, when every level is completely full).

Well, (2, 4) trees solve this problem by allowing more than 1 data to be in a node. Naturally, the time complexity of searching now depends on both the height and the number of data allowed per node. Allowing up to 3 data per node is a nice compromise between these two costs that still lets us perfectly balance the tree efficiently. Of course, there will be imbalances in the sense that some nodes will be more filled than others, but from the perspective of heights, the tree is perfectly balanced.
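To see the range of heights concretely, the Java sketch below counts levels (so a single-node tree has 1 level) for 𝑛 data. The fewest levels occur when every node is a 4-node, since 𝐿 levels then hold 4ᴸ - 1 data; the most occur when every node is a 2-node, since 𝐿 levels then require 2ᴸ - 1 data. Either way, the height is logarithmic in 𝑛. `TwoFourHeight`, `minLevels`, and `maxLevels` are hypothetical names, and the loops assume `n` is small enough not to overflow a `long`.

```java
public class TwoFourHeight {
    // Fewest levels: every node is a 4-node (3 data), so L levels hold at most 4^L - 1 data.
    static int minLevels(long n) {
        int levels = 0;
        long capacity = 0; // invariant: capacity == 4^levels - 1
        while (capacity < n) {
            levels++;
            capacity = capacity * 4 + 3;
        }
        return levels;
    }

    // Most levels: every node is a 2-node (1 datum), so L levels need at least 2^L - 1 data.
    static int maxLevels(long n) {
        int levels = 0;
        long needed = 1; // invariant: needed == 2^(levels + 1) - 1, the data for one more level
        while (needed <= n) {
            levels++;
            needed = needed * 2 + 1;
        }
        return levels;
    }

    public static void main(String[] args) {
        long n = 1_000_000L;
        System.out.println(minLevels(n) + " to " + maxLevels(n) + " levels");
    }
}
```

For 𝑛 = 1,000,000, `minLevels` returns 10 and `maxLevels` returns 19.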

Searching in a (2, 4) Tree

In a BST node, there was only one data, 𝑥. To decide whether to go left or right, we checked whether our new data 𝑦 satisfied 𝑦 < 𝑥 or 𝑦 > 𝑥. Searching in a (2, 4) tree follows the same principle. Suppose we have a 4-node as shown below. (The 4-node contains 3 data and has 4 children.)

The direction in which we search is determined by the "area" between data where the target may be located. The decision process is similar for 3-nodes and, of course, 2-nodes. Let's look at an example. Suppose we have the (2, 4) tree given below and want to look for the data 16. Then, the path followed in the diagram is precisely the search path we would take. The red numbers denote where we compared the data, and the green numbers denote where we found the data.
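The search procedure can be sketched in Java. This assumes a hypothetical node layout, with 1 to 3 sorted `int` data and either no children (a leaf) or exactly one more child than data; `TwoFourSearch`, `Node`, `with`, and `contains` are illustrative names, not the course's implementation. At each node, we skip past every datum smaller than the target; the index where we stop is either the target itself or the child whose area could contain it.

```java
import java.util.ArrayList;
import java.util.List;

public class TwoFourSearch {
    // A (2, 4) node: 1 to 3 sorted data, and either 0 children (leaf) or data.size() + 1 children.
    static final class Node {
        final List<Integer> data = new ArrayList<>();
        final List<Node> children = new ArrayList<>();

        Node(Integer... values) {
            for (Integer v : values) {
                data.add(v);
            }
        }

        // Attach children left to right; returns this node for convenient tree building.
        Node with(Node... kids) {
            for (Node k : kids) {
                children.add(k);
            }
            return this;
        }

        boolean isLeaf() {
            return children.isEmpty();
        }
    }

    // Returns true if target is stored in the subtree rooted at node.
    static boolean contains(Node node, int target) {
        while (node != null) {
            int i = 0;
            // Skip every datum smaller than the target; i ends at the target's "area".
            while (i < node.data.size() && target > node.data.get(i)) {
                i++;
            }
            if (i < node.data.size() && target == node.data.get(i)) {
                return true; // found in this node
            }
            if (node.isLeaf()) {
                return false; // nowhere left to descend
            }
            node = node.children.get(i); // child i holds data between data[i-1] and data[i]
        }
        return false;
    }
}
```

For instance, with a made-up root holding 20 and 40 and leaves holding {5, 10}, {25, 30, 35}, and {50}, searching for 30 compares against 20 and 40, descends into the middle child, and finds 30 there, whereas searching for 16 ends at the leftmost leaf and returns false.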

Food for Thought - B-Trees: Querying Large Databases

As we mentioned, (2, 4) trees are a special case of B-trees, namely those that allow up to 4 children per node. Another way of saying this is that a (2, 4) tree is a B-tree of order 4. For those curious about the name of B-trees, there is actually no official consensus for what the B stands for, so assume it to be whatever you like!

As we mentioned, B-trees have an inherent tradeoff. The higher the order of the tree, the shorter the tree. However, a higher order also means more data per node, and therefore more operations to process each node. In short, we're just shifting the cost of searching from traversing the tree to searching a sorted list-like structure within each node. That raises the question: what good is that? As it turns out, B-trees are very commonly used for large databases!
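The tradeoff can be made concrete with a little arithmetic. The Java sketch below assumes a B-tree of order 𝑚 whose nodes are all full (𝑚 - 1 data each) and a binary search inside each node; `BTreeTradeoff`, `minLevels`, and `comparisonsPerNode` are hypothetical names, and `minLevels` assumes `n` is small enough that `capacity * m` doesn't overflow a `long`. For 𝑛 = 10⁹ data, raising the order shrinks the number of levels dramatically, but the worst-case total number of comparisons stays around log₂(𝑛) ≈ 30: the work moves from visiting nodes to searching within them.

```java
public class BTreeTradeoff {
    // Fewest levels a B-tree of order m needs for n data:
    // with every node full (m - 1 data), L levels hold m^L - 1 data.
    static int minLevels(long n, int m) {
        int levels = 0;
        long capacity = 0; // invariant: capacity == m^levels - 1
        while (capacity < n) {
            levels++;
            capacity = capacity * m + (m - 1);
        }
        return levels;
    }

    // Roughly ceil(log2(m)) comparisons to binary-search one full node (m - 1 data), for m >= 2.
    static int comparisonsPerNode(int m) {
        return 32 - Integer.numberOfLeadingZeros(m - 1);
    }

    public static void main(String[] args) {
        long n = 1_000_000_000L;
        for (int m : new int[] {4, 16, 256, 1024}) {
            int levels = minLevels(n, m);
            int perNode = comparisonsPerNode(m);
            System.out.printf("order %4d: %2d levels x %2d comparisons = %d total%n",
                    m, levels, perNode, levels * perNode);
        }
    }
}
```

With order 4 the tree needs 15 levels at 2 comparisons each; with order 1024 it needs only 3 levels, but at 10 comparisons each.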